Hi everyone! This is my first time posting here. I made a similar thread on gamedev.net but unfortunately got no response, so I'm trying my luck here instead! My problem is very similar to this topic from 10 years ago (since I'm new here I can't post full links, so I'll just give the topic info): "Billboard geometry shader", posted by Vexator, topic id 6561.

However, the link to the solution posted there just redirects me to the Khronos forums home page.

I'll briefly describe the problem, which I also posted on gamedev. Since I can't post links, you can find that thread by searching for: Spherical Billboard of a Line Strip with Geometry Shader in OpenGL

I'm interested in rendering hair in real time with OpenGL, so I studied Nvidia's presentation from SIGGRAPH 2010. In it, they suggest rendering each line segment as a camera-facing quad instead of using the GL_LINE_STRIP primitive.

My camera is built following the camera tutorial on LearnOpenGL.

The camera class is:

```cpp
#include <glm/glm.hpp>

class Camera {
public:
    // camera attributes
    glm::vec3 Position;
    glm::vec3 Front;
    glm::vec3 Up;
    glm::vec3 Right;
    glm::vec3 WorldUp;

    // Euler angles
    float Yaw;
    float Pitch;

    // camera options
    float MovementSpeed;
    float MouseSensitivity;
    float Zoom;

    // ... (camera functions) ...
};
```
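For reference, the LearnOpenGL camera derives `Front`, `Right` and `Up` from `Yaw` and `Pitch` in its `updateCameraVectors()` function. The sketch below reproduces that math on the CPU so the basis can be checked in isolation. It uses a tiny hypothetical `Vec3` struct in place of `glm::vec3` so it compiles without GLM, and `CameraBasis` is likewise a name made up for this example. Note that `Front` is a single viewing direction shared by every vertex, whereas a per-vertex eye vector (from the point to the camera) varies across the screen under a perspective projection.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for glm::vec3 (hypothetical, for illustration only).
struct Vec3 { float x, y, z; };

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f && "cannot normalize a zero-length vector");
    return {v.x / len, v.y / len, v.z / len};
}

struct CameraBasis { Vec3 front, right, up; };

// Rebuilds Front/Right/Up from Yaw and Pitch (given in degrees), following the
// same formulas as the LearnOpenGL camera's updateCameraVectors().
CameraBasis updateCameraVectors(float yawDeg, float pitchDeg, Vec3 worldUp) {
    const float kDegToRad = 3.14159265358979f / 180.0f;
    float yaw = yawDeg * kDegToRad;
    float pitch = pitchDeg * kDegToRad;
    Vec3 front = normalize({std::cos(yaw) * std::cos(pitch),
                            std::sin(pitch),
                            std::sin(yaw) * std::cos(pitch)});
    Vec3 right = normalize(cross(front, worldUp));
    Vec3 up = normalize(cross(right, front));
    return {front, right, up};
}
```

With the tutorial's default `Yaw = -90` and `Pitch = 0` and a world up of (0, 1, 0), this gives a front of roughly (0, 0, -1), a right of (1, 0, 0) and an up of (0, 1, 0).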

For the "eye vector", I use the Front vector of the camera class. The line coordinates are passed in a VBO. Here is the vertex shader:

```glsl
#version 430

layout (location = 0) in vec3 aPos;
layout (location = 1) in vec3 aColor;
layout (location = 2) in float aTransparency;
layout (location = 3) in float aSegmentIndex;
layout (location = 4) in float aThickness;

uniform vec3 camera_position;
uniform mat4 model;
uniform mat4 view;

out vec3 color;
out float transparency;

out VS_OUT {
    float thickness;
    vec3 eye_vector;
    vec4 point;
} vs_out;

void main()
{
    gl_Position = view * model * vec4(aPos, 1.0);
    color = aColor;
    vs_out.eye_vector = normalize(camera_position - gl_Position.xyz);
    vs_out.thickness = aThickness;
    vs_out.point = gl_Position;
    transparency = aTransparency;
}
```

Each line has two points, and each point gets its own VS_OUT block (vs_out). In it, I store the normalized direction from the point to the camera (the eye vector) and the thickness value. Each vertex is multiplied by the model-view matrix (view * model). The geometry shader is as follows:
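Since `gl_Position` and `vs_out.point` are multiplied by `view * model` only, they are in view (eye) space, where the camera sits at the origin. The sketch below assumes a rigid view transform in the usual OpenGL convention (its rows are the camera's right, up and negated front axes, as `glm::lookAt` builds them) and computes the point-to-camera direction for a view-space point as `normalize(-p)`. `Vec3`, `ViewTransform`, `toViewSpace` and `eyeVectorViewSpace` are hypothetical helpers for illustration. It also shows that subtracting a view-space position from a world-space camera position generally gives a different direction, so both operands need to be in the same space.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for glm::vec3 (hypothetical, for illustration only).
struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f && "cannot normalize a zero-length vector");
    return {v.x / len, v.y / len, v.z / len};
}

// Rigid view transform: p_view = R * (p_world - eye), where the rows of R are
// the camera's right, up and back (= -front) axes.
struct ViewTransform { Vec3 right, up, back, eye; };

Vec3 toViewSpace(const ViewTransform& v, Vec3 pWorld) {
    Vec3 d = sub(pWorld, v.eye);
    return {dot(v.right, d), dot(v.up, d), dot(v.back, d)};
}

// In view space the camera is at the origin, so the direction from the point
// to the camera is just the negated, normalized position.
Vec3 eyeVectorViewSpace(Vec3 pView) {
    return normalize({-pView.x, -pView.y, -pView.z});
}
```

For a camera at (0, 0, 5) looking down -Z, the world point (1, 0, 0) maps to (1, 0, -5) in view space, and `eyeVectorViewSpace` returns normalize(-1, 0, 5), the same direction as normalize(camera - point) computed entirely in world space (the rotation is the identity here). Computing normalize((0, 0, 5) - (1, 0, -5)) instead mixes the two spaces and yields normalize(-1, 0, 10).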

#version 430

layout (lines) in;

layout (triangle_strip, max_vertices = 4) out;

uniform mat4 projection;

in VS_OUT{

float thickness;

vec3 eye_vector;

vec4 point;

}gs_in[];

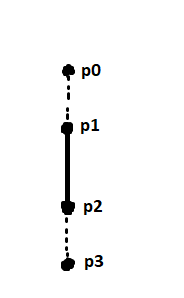

void main(){

float radius = gs_in[0].thickness / 2;

vec4 p1 = gs_in[0].point;

vec4 p2 = gs_in[1].point;

vec3 tangent = normalize(p1.xyz - p2.xyz);

vec3 expand_direction1 = normalize(cross(gs_in[0].eye_vector,tangent));

vec3 expand_direction2 = normalize(cross(gs_in[1].eye_vector,tangent));

vec3 Pos;

Pos = p1.xyz + expand_direction1 * radius;

gl_Position = projection * vec4(Pos, 1.0);

EmitVertex();

Pos = p1.xyz - expand_direction1 * radius;

gl_Position = projection * vec4(Pos, 1.0);

EmitVertex();

Pos = p2.xyz + expand_direction2 * radius;

gl_Position = projection * vec4(Pos, 1.0);

EmitVertex();

Pos = p2.xyz - expand_direction2 * radius;

gl_Position = projection * vec4(Pos, 1.0);

EmitVertex();

EndPrimitive();

}

First I compute the tangent, as suggested in the Nvidia presentation. Then, for each point of the line, I compute the cross product of the eye vector and the tangent; this cross product is the expand direction. Finally, I offset each point by the radius (half the thickness) in both directions along its expand direction and multiply the result by the projection matrix (I use a perspective projection).
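To check the expansion step independently of the GPU, here is a CPU-side sketch that mirrors the geometry shader above for a single segment, assuming both endpoints and both eye vectors are already in view space (i.e. before the projection matrix is applied). `Vec3` and `expandSegment` are hypothetical names. The corners come out in the same order the shader emits them, which a triangle strip turns into two triangles covering the quad.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Minimal stand-in for glm::vec3 (hypothetical, for illustration only).
struct Vec3 { float x, y, z; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f && "cannot normalize a zero-length vector");
    return {v.x / len, v.y / len, v.z / len};
}

// Mirrors the geometry shader: returns the four corners in triangle-strip
// order (p1 + offset, p1 - offset, p2 + offset, p2 - offset), in view space.
std::array<Vec3, 4> expandSegment(Vec3 p1, Vec3 p2, Vec3 eye1, Vec3 eye2,
                                  float thickness) {
    float radius = thickness / 2.0f;
    Vec3 tangent = normalize(sub(p1, p2));
    Vec3 dir1 = normalize(cross(eye1, tangent));
    Vec3 dir2 = normalize(cross(eye2, tangent));
    return {add(p1, scale(dir1, radius)), sub(p1, scale(dir1, radius)),
            add(p2, scale(dir2, radius)), sub(p2, scale(dir2, radius))};
}
```

For a vertical segment from (0, 0, -5) to (0, 1, -5) with a thickness of 0.1 and eye vectors pointing back at the origin, the corners lie at x = ±0.05 on either end, i.e. the quad is widened sideways, facing the camera.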

Even though it looks good from a distance, there are some issues when I zoom in. There seem to be discontinuities between the line segments, as if each segment rotates differently. All quads should face the camera, and the bottom edge of one quad should line up with the top edge of the next (if that makes sense).
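The symptom is consistent with what happens at a shared vertex: the geometry shader computes the tangent per segment, so the two segments that meet at a point generally get different expand directions there, and their quad edges do not coincide. The sketch below, with hypothetical helper names and a plain `Vec3` in place of GLM, measures this for a bent three-point strip in view space, and shows one common alternative: averaging the two segment tangents at the joint so that both quads compute the same edge. In a geometry shader, the neighboring points needed for that average would have to come from `layout (lines_adjacency) in;` together with drawing the strip as `GL_LINE_STRIP_ADJACENCY`; that wiring is an assumption and is not exercised here.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for glm::vec3 (hypothetical, for illustration only).
struct Vec3 { float x, y, z; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f && "cannot normalize a zero-length vector");
    return {v.x / len, v.y / len, v.z / len};
}

// Expand direction as in the geometry shader: perpendicular to both the eye
// vector and the tangent.
Vec3 expandDirection(Vec3 eye, Vec3 tangent) {
    return normalize(cross(eye, tangent));
}

// Per-segment tangent, same convention as the shader: normalize(a - b).
Vec3 segmentTangent(Vec3 a, Vec3 b) { return normalize(sub(a, b)); }

// Alternative: one tangent per shared vertex, averaged from the two segments
// meeting there (assumes the strip does not fold straight back on itself).
Vec3 jointTangent(Vec3 prev, Vec3 curr, Vec3 next) {
    return normalize(add(segmentTangent(prev, curr), segmentTangent(curr, next)));
}
```

For the strip (0, 0, -5), (0, 1, -5), (1, 2, -5), the two per-segment expand directions at the middle point differ noticeably (their dot product is about 0.7), so the end of the first quad and the start of the second do not share an edge. Using `jointTangent` for both segments at that point gives them one common expand direction by construction.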

You can see the pictures in the gamedev thread, since this forum still won't let me upload images.

I've tried many different things, but nothing seems to work for me. This is the closest I've gotten to a correct billboard geometry shader, but the disconnected segments and the weird rotation when the camera zooms in kind of ruin the whole implementation. Thank you for reading!