Hi folks,

I started learning OpenGL 3.3 and defined my triangle coordinates like this:

```c
#include "ref.h"

int main(void) {
    WindowInit(&window, "Test", WINW, WINH, SDL_WINDOW_SHOWN | SDL_WINDOW_OPENGL);
    game = GameInit(60);

    VAO vaodef = VAOInit(1);
    VAOCreate(&vaodef);
    VAOBind(&vaodef);

    const GLfloat triangle_data[] = {
        -1.0f, -1.0f, 0.0f,
         1.0f, -1.0f, 0.0f,
         0.0f,  1.0f, 0.0f,
    };

    Mesh triangle = MeshInit();
    triangle.Load(&triangle, triangle_data, vsize(triangle_data), 1);
    triangle.index = 0; /* Vertex Attrib Index */

    Shader triangle_shader = ShaderInit();
    triangle_shader.Load(&triangle_shader, "TriangleVertexShader.glsl", "TriangleFragmentShader.glsl");

    while (game.running == true) {
        game.frameStart = SDL_GetTicks();
        glc(0x00, 0x00, 0x00, 0x00);

        if (SDL_PollEvent(&game.ev) != 0) {
            if (game.ev.type == SDL_KEYDOWN) {
                switch (GetInput()) {
                case SDL_QUIT:
                    game.running = false;
                    break;
                }
            }
        }

        triangle_shader.Use(&triangle_shader);
        triangle.Draw(&triangle);
        glUseProgram(0);
        glswap;

        game.frameTime = SDL_GetTicks() - game.frameStart;
        handleFPS();
    }

    WindowDestroy(&window);
}
```

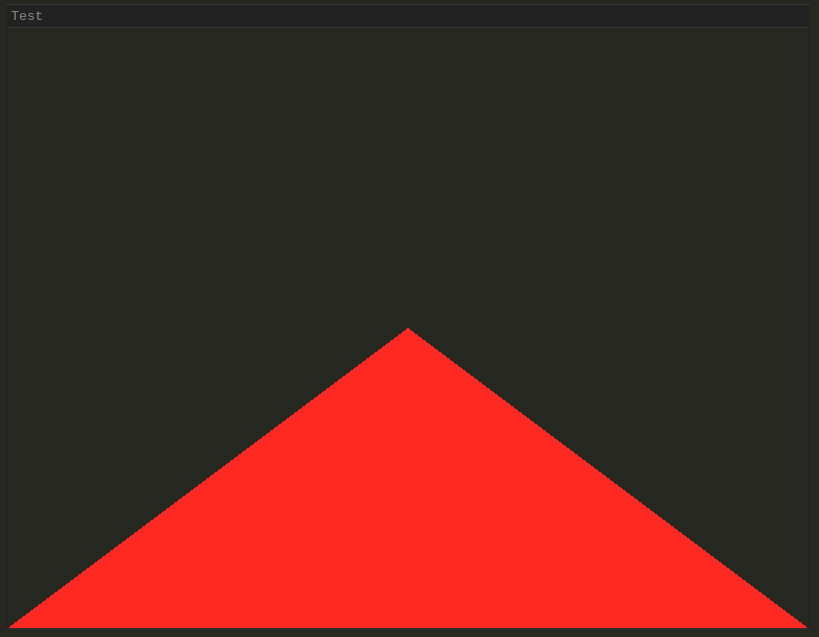

But output is this:

How can i fix this? Upper triangle edge should be high to the top of the window…

EDIT: I forgot the shaders:

Vertex shader:

```glsl
#version 330 core

layout(location = 0) in vec3 aPos;

void main() {
    gl_Position = vec4(aPos, 1.0);
}
```

Fragment shader:

```glsl
#version 330 core

out vec3 color;

void main() {
    color = vec3(1, 0, 0);
}
```