For a project I am working on, I am trying to produce ray-traced shadows: for each pixel I reconstruct the visible surface point and cast a ray from it toward the light to see whether it is in shadow. However, I am getting strange bugs where the orientation of the camera seems to change the shadows.
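The basic shadow test can be sketched on the CPU. This is a minimal C++ sketch, not the project's code: a single hypothetical sphere (`Sphere`, `HitsSphere`) stands in for the scene, the light is assumed to be directional, and `lightDirection` is assumed to point from the light toward the scene, matching the `-LightDirection` used in the shader below.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3-component vector for the sketch.
struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) { return v * (1.0 / std::sqrt(dot(v, v))); }

// Hypothetical occluder: one sphere stands in for the scene geometry.
struct Sphere { Vec3 center; double radius; };

// Solves |origin + t*dir - center|^2 = r^2 for a normalized dir.
// Returns true if any intersection lies in front of the origin (t > 0).
bool HitsSphere(Vec3 origin, Vec3 dir, const Sphere& s) {
    Vec3 oc = origin - s.center;
    double b = dot(oc, dir);
    double c = dot(oc, oc) - s.radius * s.radius;
    double disc = b * b - c;
    if (disc < 0.0) return false;  // ray misses the sphere entirely
    double sq = std::sqrt(disc);
    return (-b - sq) > 0.0 || (-b + sq) > 0.0;
}

// Directional light: trace from the surface along -lightDirection.
bool InLight(Vec3 surfacePoint, Vec3 lightDirection, const Sphere& occluder) {
    Vec3 dir = normalize(lightDirection * -1.0);
    // Small offset along the ray to avoid hitting the surface itself.
    Vec3 origin = surfacePoint + dir * 0.001;
    return !HitsSphere(origin, dir, occluder);
}
```

With the light shining straight down and a sphere at `(0, 2, 0)`, the point directly beneath it is shadowed, while points beside or above it are lit.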

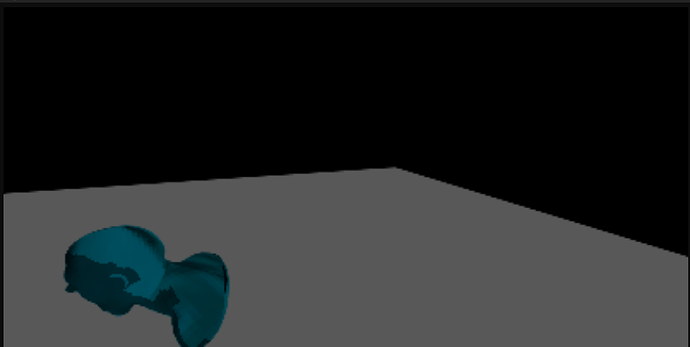

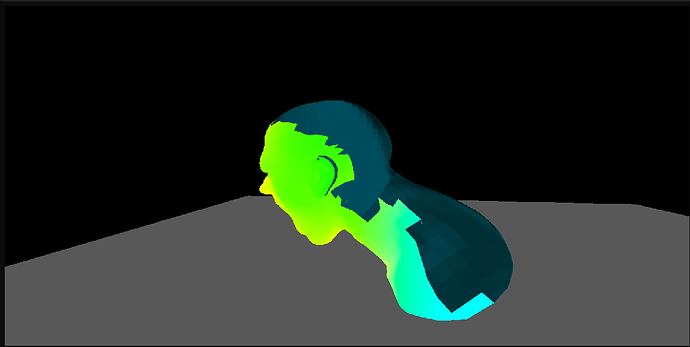

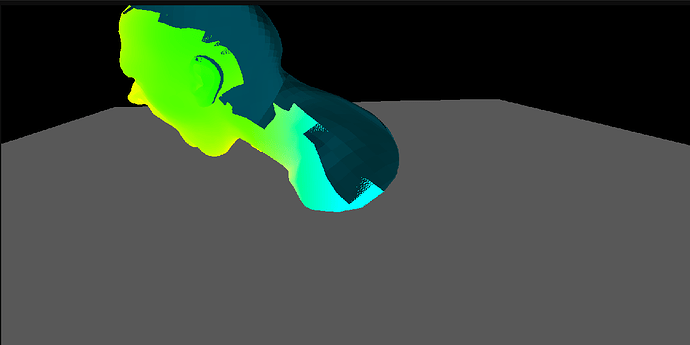

In the first image you can see where the shadows are being produced. However, if I look in a different direction, as in the next image, the shadow on the model changes. At first I thought this was an issue with how I reconstruct the world-space position from the depth buffer, but I couldn't find any problems there.
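One way to check the reconstruction independently of the GPU is to mirror it on the CPU: project a known world point with the same matrices, store the depth the way the rasterizer would, reconstruct the point, and compare. If the math is right, the error stays tiny for every camera orientation. This sketch assumes OpenGL conventions (right-handed view space looking down -Z, NDC depth in [-1, 1], `glDepthRange(0, 1)`) and a yaw-only camera; the field of view, aspect ratio and clip planes are arbitrary, and all function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec4 { double x, y, z, w; };
struct Mat4 { double m[4][4]; };  // row-major, applied as M * v

Vec4 Mul(const Mat4& a, Vec4 v) {
    const double in[4] = {v.x, v.y, v.z, v.w};
    double out[4] = {0.0, 0.0, 0.0, 0.0};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c) out[r] += a.m[r][c] * in[c];
    return {out[0], out[1], out[2], out[3]};
}

// Standard OpenGL perspective matrix (NDC z in [-1, 1]).
Mat4 Projection(double fovy, double aspect, double n, double f) {
    const double t = 1.0 / std::tan(fovy / 2.0);
    return {{{t / aspect, 0, 0, 0},
             {0, t, 0, 0},
             {0, 0, (f + n) / (n - f), 2.0 * f * n / (n - f)},
             {0, 0, -1, 0}}};
}

// Closed-form inverse of the matrix above.
Mat4 InverseProjection(double fovy, double aspect, double n, double f) {
    const double t = 1.0 / std::tan(fovy / 2.0);
    const double c = (f + n) / (n - f), d = 2.0 * f * n / (n - f);
    return {{{aspect / t, 0, 0, 0},
             {0, 1.0 / t, 0, 0},
             {0, 0, 0, -1},
             {0, 0, 1.0 / d, c / d}}};
}

// Hypothetical camera: position plus a rotation (yaw) about the Y axis.
struct Camera { double px, py, pz, yaw; };

// World -> view: transpose of the rotation, then translate by -R^T * position.
Mat4 ViewMatrix(const Camera& cam) {
    const double c = std::cos(cam.yaw), s = std::sin(cam.yaw);
    Mat4 v = {{{c, 0, -s, 0}, {0, 1, 0, 0}, {s, 0, c, 0}, {0, 0, 0, 1}}};
    v.m[0][3] = -(c * cam.px - s * cam.pz);
    v.m[1][3] = -cam.py;
    v.m[2][3] = -(s * cam.px + c * cam.pz);
    return v;
}

// View -> world (the role of InverseView): rotate, then translate by position.
Mat4 InverseViewMatrix(const Camera& cam) {
    const double c = std::cos(cam.yaw), s = std::sin(cam.yaw);
    return {{{c, 0, s, cam.px}, {0, 1, 0, cam.py}, {-s, 0, c, cam.pz}, {0, 0, 0, 1}}};
}

// Forward path: world point -> (u, v) in [0, 1] and stored depth in [0, 1].
void ProjectWorld(Vec4 world, const Mat4& proj, const Mat4& view,
                  double& u, double& v, double& depth) {
    Vec4 clip = Mul(proj, Mul(view, world));
    u = (clip.x / clip.w) * 0.5 + 0.5;
    v = (clip.y / clip.w) * 0.5 + 0.5;
    depth = (clip.z / clip.w) * 0.5 + 0.5;  // glDepthRange(0, 1)
}

// Same steps as the shader: [0, 1] -> NDC, unproject, divide by w, to world.
Vec4 ReconstructWorld(double u, double v, double depth,
                      const Mat4& invProj, const Mat4& invView) {
    Vec4 viewPos = Mul(invProj, {u * 2.0 - 1.0, v * 2.0 - 1.0, depth * 2.0 - 1.0, 1.0});
    viewPos = {viewPos.x / viewPos.w, viewPos.y / viewPos.w, viewPos.z / viewPos.w, 1.0};
    return Mul(invView, viewPos);
}

// Distance between a world point and its reconstruction for one camera.
double RoundTripError(const Camera& cam, Vec4 world) {
    const double fovy = 1.0471975512, aspect = 16.0 / 9.0, n = 0.1, f = 100.0;
    double u, v, depth;
    ProjectWorld(world, Projection(fovy, aspect, n, f), ViewMatrix(cam), u, v, depth);
    Vec4 back = ReconstructWorld(u, v, depth, InverseProjection(fovy, aspect, n, f),
                                 InverseViewMatrix(cam));
    const double dx = back.x - world.x, dy = back.y - world.y, dz = back.z - world.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

Feeding the real matrices from the application into the same round trip, for a few camera orientations, would show whether the uploaded `InverseProjection`/`InverseView` actually invert what the rasterizer used.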

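```glsl
RayPayload DispatchShadowRay(in ivec2 Coordinate, in vec3 LightDirection)
{
    RayPayload Payload;
    Payload.Valid = true;
    Payload.InLight = true;

    // Pixel centre -> [0, 1] texture coordinates.
    vec2 NormalizedCoord = (vec2(Coordinate) + vec2(0.5)) / imageSize(ColorBuffer);

    // Depth 1.0 is the far plane (background), so there is nothing to shadow.
    float DepthValue = texture(DepthBuffer, NormalizedCoord).r;
    if (DepthValue >= 1.0)
        return Payload;

    // [0, 1] UV and depth -> [-1, 1] NDC (OpenGL convention), then unproject to view space.
    vec4 ViewSpace = InverseProjection * vec4(NormalizedCoord * 2.0 - 1.0,
                                              DepthValue * 2.0 - 1.0, 1.0);
    ViewSpace /= ViewSpace.w;

    // View space -> world space.
    vec4 WorldSpace = InverseView * ViewSpace;

    // Trace from the surface toward the light, offset slightly to avoid self-intersection.
    Payload.Origin = WorldSpace.xyz;
    Payload.Direction = normalize(-LightDirection);
    Payload.Origin += Payload.Direction * 0.001;

    TraceRay(Payload);
    return Payload;
}
```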
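The ray origin here is in world space, so `LightDirection` has to be in world space too. If it is ever computed in view space, it must be rotated into world space as a direction (`w = 0`, so the camera translation is ignored); a view-space light direction used directly would make the shadows turn with the camera. A minimal C++ sketch of the point/direction distinction, with a hypothetical yaw-only `CameraToWorld` standing in for `InverseView`:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Hypothetical yaw-only camera-to-world transform (the role InverseView plays).
struct CameraToWorld { double yaw; Vec3 position; };

// Point (w = 1): rotate about Y, then translate by the camera position.
Vec3 TransformPoint(const CameraToWorld& m, Vec3 p) {
    const double c = std::cos(m.yaw), s = std::sin(m.yaw);
    return {c * p.x + s * p.z + m.position.x,
            p.y + m.position.y,
            -s * p.x + c * p.z + m.position.z};
}

// Direction (w = 0): rotate only; translation must not affect a direction.
Vec3 TransformDirection(const CameraToWorld& m, Vec3 d) {
    const double c = std::cos(m.yaw), s = std::sin(m.yaw);
    return {c * d.x + s * d.z, d.y, -s * d.x + c * d.z};
}
```

In GLSL the equivalent is `(InverseView * vec4(Dir, 0.0)).xyz` for a direction versus `InverseView * vec4(Pos, 1.0)` for a position.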

I have been trying to use Nsight to debug this, but I haven't found it very useful.

My main questions are:

- Are there any obvious bugs or mistakes that I may have missed or not checked?
- How should I continue debugging this problem? Are there any other tools that might provide more insight? I have tried RenderDoc, but it just crashes, so I haven't been able to use it.