Hello! I've run into a problem with writing my shader code correctly.

Until now I have always written my shaders like this:

- Vertex shader:

```glsl
#version 330

in vec3 in_vert;
in vec4 in_color;

out vec4 o_color;
out float distance;

void main() {
    vec3 rpos = in_vert;
    gl_Position = vec4(rpos.x, rpos.y, rpos.z, rpos.z);
    o_color = in_color;
    distance = rpos.z;
}
```

- Fragment shader:

```glsl
#version 330

in vec4 o_color;
in float distance;

out vec4 f_color;

void main() {
    f_color = o_color;
    gl_FragDepth = distance;
}
```
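
For context, here is why the fragment shader writes gl_FragDepth at all, as far as I understand the pipeline. The vertex shader sets the clip-space w equal to z, so after the perspective divide (assuming z > 0):

$$
x_{ndc} = \frac{x}{z}, \qquad y_{ndc} = \frac{y}{z}, \qquad z_{ndc} = \frac{z}{z} = 1
$$

The x and y division gives the perspective effect I want, but every vertex ends up with the same depth (the far plane), so the depth test cannot tell objects apart unless I overwrite the depth myself in the fragment shader.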

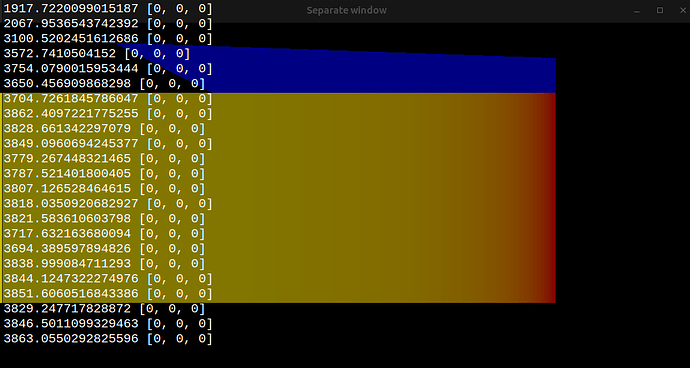

But it soon became clear that writing to gl_FragDepth seriously reduces performance (up to 10 times slower in the worst case).

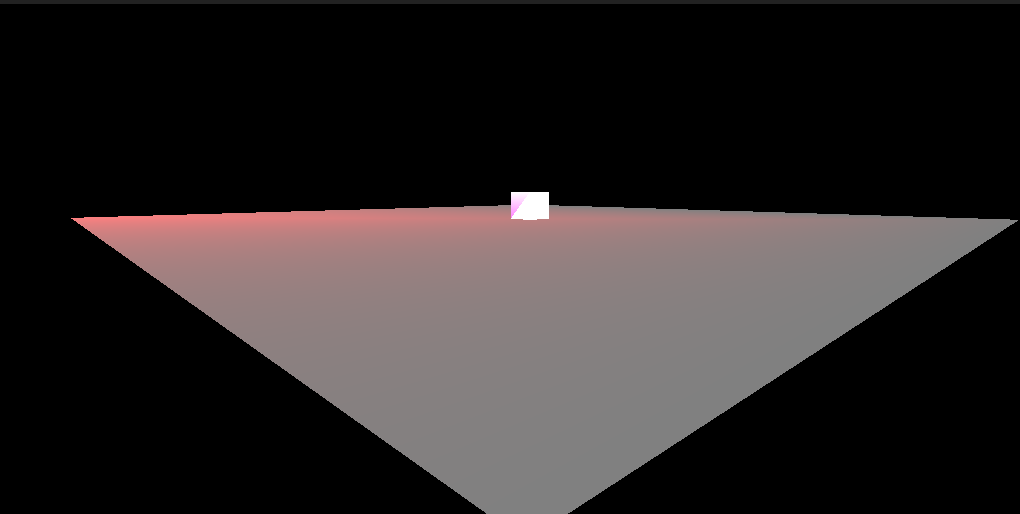

I’ve read that you usually need a projection matrix to get perspective, but I don’t like that it requires a near plane.

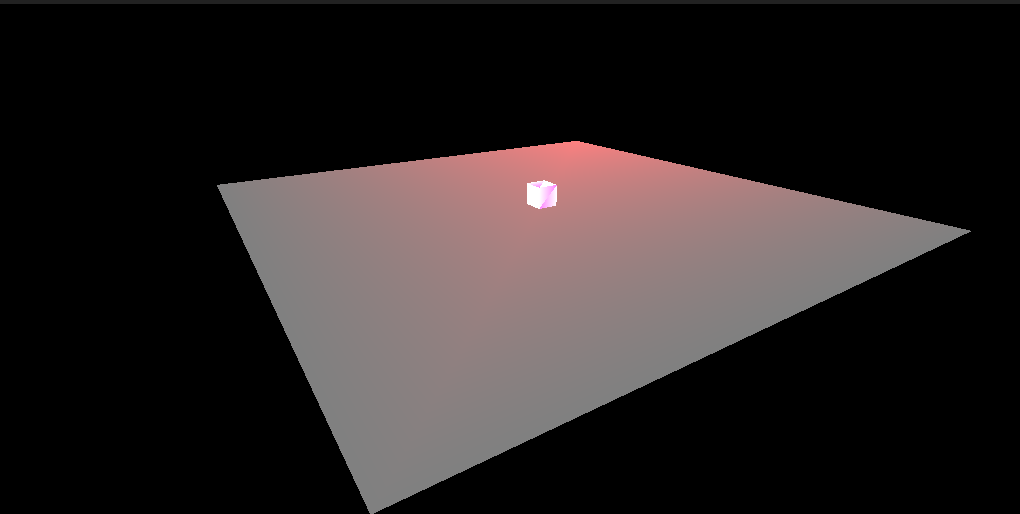

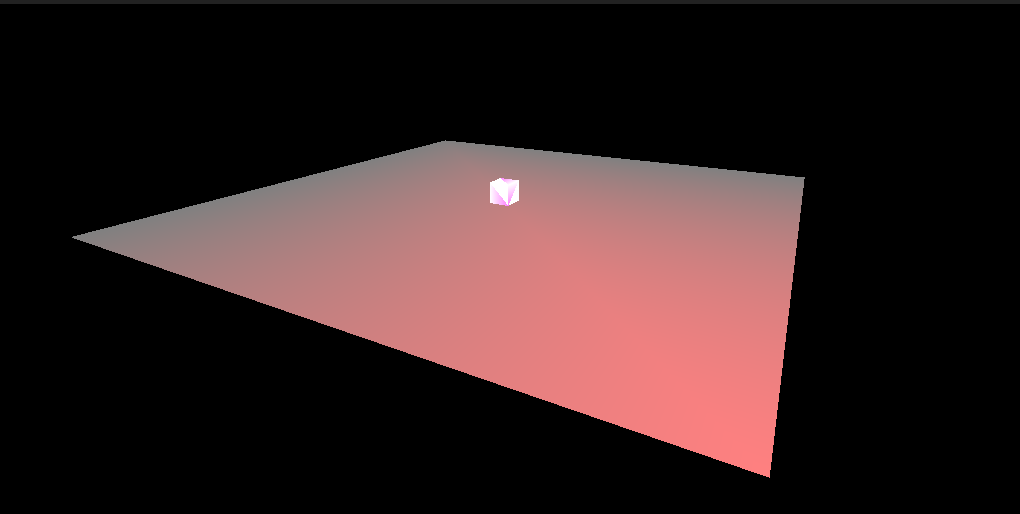

I just want all objects with a Z coordinate between 0 and 1 to be drawn, and the perspective matrix doesn’t let me achieve that. At best I could use a range like 0.000001 to 1, but that does not suit me at all.

The whole problem comes down to gl_Position in the vertex shader.

I need to do all the transformations in gl_Position so that I get a perspective projection without a projection matrix.

I tried to do the following in the vertex shader:

gl_Position = vec4(rpos.x, rpos.y, pow(rpos.z, 2.0)*(rpos.z/abs(rpos.z)), rpos.z);

However, this did not solve the problem: with DEPTH_TEST enabled, there were many depth errors when the camera approached an object.
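
Working through what that attempt actually produces, under the assumption that the default depth range of 0 to 1 is in use: the clip-space z is $z\,|z|$ and w is still $z$, so for $z \neq 0$

$$
z_{ndc} = \frac{z\,|z|}{z} = |z|, \qquad depth = \frac{z_{ndc} + 1}{2} = \frac{|z| + 1}{2}
$$

So for Z in [0, 1] only the upper half of the depth buffer (0.5 to 1) is used. The expression is also undefined at exactly Z = 0, because of the division by abs(rpos.z). I suspect, but have not confirmed, that the remaining errors come from interpolation: depth is interpolated linearly across the triangle in screen space, while the true Z of a surface is not linear in screen space after the perspective divide, so a depth that is linear in Z can be wrong in the interior of large or nearby triangles.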

Does anyone know how to get a perspective projection purely through gl_Position, with depth covering [0 <= Z <= 1]?