I’m having some trouble fully understanding how to transform between coordinate spaces. I’m trying to implement normal mapping; my approach may not be the most efficient, but I’m trying to learn how the pieces fit together. I pass my matrices and vertex data into my vertex shader:

    #version 330 core

    layout(location = 0) in vec3 aPosition;
    layout(location = 1) in vec3 aNormal;
    layout(location = 2) in vec2 aUvCoord;
    layout(location = 3) in float aBoneGroup;

    out mat4 f_model;
    out mat4 f_view;

    out VS_OUT
    {
        vec3 normal_cameraSpace;
        vec3 normal_worldSpace;
        vec3 normal_modelSpace;
        vec2 uvCoord;
        vec3 position_cameraSpace;
        vec3 position_worldSpace;
        vec3 position_modelSpace;
    } vs_out;

    layout(std140) uniform Transforms
    {
        mat4 projection;
        mat4 view;
    };

    uniform mat4 model;

    void main()
    {
        vec4 local = vec4(aPosition, 1.0);
        mat4 _mv = view * model;

        vec4 _position_worldSpace = model * local;
        vec4 _position_cameraSpace = view * _position_worldSpace;
        gl_Position = projection * _position_cameraSpace;

        vs_out.normal_modelSpace = aNormal;
        vs_out.normal_worldSpace = mat3(transpose(inverse(model))) * aNormal;
        vs_out.normal_cameraSpace = mat3(transpose(inverse(_mv))) * aNormal;
        vs_out.uvCoord = aUvCoord;

        vs_out.position_modelSpace = local.xyz;
        vs_out.position_worldSpace = _position_worldSpace.xyz;
        vs_out.position_cameraSpace = _position_cameraSpace.xyz;

        f_view = view;
        f_model = model;
    }

Some of the outputs are redundant because I’ve been trying several approaches to get this working. I then calculate my TBN matrix in my geometry shader:

    #version 330 core

    layout(triangles) in;
    layout(triangle_strip, max_vertices = 3) out;

    in mat4 f_model[];
    in mat4 f_view[];

    in VS_OUT
    {
        vec3 normal_cameraSpace;
        vec3 normal_worldSpace;
        vec3 normal_modelSpace;
        vec2 uvCoord;
        vec3 position_cameraSpace;
        vec3 position_worldSpace;
        vec3 position_modelSpace;
    } vs_out[3];

    out mat4 g_view;
    out mat4 g_model;

    out GS_OUT
    {
        mat3 TBN_worldSpace;
        mat3 TBN_cameraSpace;
        vec3 normal_cameraSpace;
        vec3 normal_worldSpace;
        vec2 uvCoord;
        vec3 position_cameraSpace;
        vec3 position_worldSpace;
        vec3 position_modelSpace;
    } gs_out;

    void main() {
        vec3 edge1 = vs_out[1].position_modelSpace.xyz - vs_out[0].position_modelSpace.xyz;
        vec3 edge2 = vs_out[2].position_modelSpace.xyz - vs_out[0].position_modelSpace.xyz;
        vec2 deltaUv1 = vs_out[1].uvCoord - vs_out[0].uvCoord;
        vec2 deltaUv2 = vs_out[2].uvCoord - vs_out[0].uvCoord;

        float f = 1.0 / (deltaUv1.x * deltaUv2.y - deltaUv1.y * deltaUv2.x);

        vec3 _tangent_modelSpace;
        vec3 _biTangent_modelSpace;

        _tangent_modelSpace = (edge1 * deltaUv2.y - edge2 * deltaUv1.y) * f;
        //_tangent.x = f * (deltaUv2.y * edge1.x - deltaUv1.y * edge2.x);
        //_tangent.y = f * (deltaUv2.y * edge1.y - deltaUv1.y * edge2.y);
        //_tangent.z = f * (deltaUv2.y * edge1.z - deltaUv1.y * edge2.z);

        _biTangent_modelSpace = (edge2 * deltaUv1.x - edge1 * deltaUv2.x) * f;
        //_biTangent.x = f * (-deltaUv2.x * edge1.x + deltaUv1.x * edge2.x);
        //_biTangent.y = f * (-deltaUv2.x * edge1.y + deltaUv1.x * edge2.y);
        //_biTangent.z = f * (-deltaUv2.x * edge1.z + deltaUv1.x * edge2.z);

        mat3 _mv = mat3(f_view[0] * f_model[0]);

        vec3 _T = _mv * normalize(_tangent_modelSpace);
        vec3 _B = _mv * normalize(_biTangent_modelSpace);
        vec3 _N = _mv * normalize(vs_out[0].normal_modelSpace);

        gs_out.TBN_cameraSpace = mat3(_T, _B, _N);

        g_view = f_view[0];
        g_model = f_model[0];

        for (int i = 0; i < 3; ++i)
        {
            gl_Position = gl_in[i].gl_Position;

            gs_out.normal_cameraSpace = vs_out[i].normal_cameraSpace;
            gs_out.normal_worldSpace = vs_out[i].normal_worldSpace;
            gs_out.uvCoord = vs_out[i].uvCoord;
            gs_out.position_cameraSpace = vs_out[i].position_cameraSpace;
            gs_out.position_worldSpace = vs_out[i].position_worldSpace;

            EmitVertex();
        }
    }
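
One detail of the geometry stage is worth illustrating separately: per the GLSL specification, the values of all output variables are undefined once EmitVertex() returns, so anything computed once per triangle has to be written again before each emit. The following is a minimal sketch of that pattern, not a corrected version of the shader above. The names uvCoord_vs, TBN, uvCoord and the identity placeholder faceTBN are hypothetical and chosen only for illustration.

```glsl
#version 330 core

layout(triangles) in;
layout(triangle_strip, max_vertices = 3) out;

// Hypothetical per-vertex input, for illustration only.
in vec2 uvCoord_vs[];

// Hypothetical outputs: one per-triangle value and one per-vertex value.
out mat3 TBN;
out vec2 uvCoord;

void main()
{
    // Placeholder for a matrix computed once per triangle
    // (identity here, standing in for the real tangent-basis calculation).
    mat3 faceTBN = mat3(1.0);

    for (int i = 0; i < 3; ++i)
    {
        gl_Position = gl_in[i].gl_Position;

        // Output values are undefined after EmitVertex() returns, so the
        // per-triangle value is assigned again for every vertex.
        TBN = faceTBN;
        uvCoord = uvCoord_vs[i];

        EmitVertex();
    }

    EndPrimitive();
}
```

The same reasoning would apply to any output assigned only once before the loop: it is only guaranteed to hold a defined value for the first emitted vertex.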

I’ve tried following several tutorials that each calculate it differently; in this version I calculate the tangent, bitangent, and normal in model space and then convert them to camera space for the final mat3 output. Lastly, in my fragment shader:

    #version 330 core

    out vec4 FragColor;

    in mat4 g_view;
    in mat4 g_model;

    in GS_OUT
    {
        mat3 TBN_worldSpace;
        mat3 TBN_cameraSpace;
        vec3 normal_cameraSpace;
        vec3 normal_worldSpace;
        vec2 uvCoord;
        vec3 position_cameraSpace;
        vec3 position_worldSpace;
        vec3 position_modelSpace;
    } gs_out;

    struct Material
    {
        sampler2D diffuse;
        sampler2D emission;
        sampler2D normal;
        uint shininess;
        float specularModifier;
        bool hasNormal;
    };

    //...

    uniform Material material;

    //...

    void main()
    {
        vec3 _Normal_cameraSpace = gs_out.normal_cameraSpace;
        vec2 _UvCoord = gs_out.uvCoord;
        vec3 _FragPosition_cameraSpace = gs_out.position_cameraSpace;
        vec3 _FragPosition_worldSpace = gs_out.position_worldSpace;
        mat4 _View = g_view;
        mat4 _Model = g_model;

        vec4 _diffuseTexel = texture(material.diffuse, _UvCoord);
        vec4 _normalTexel = texture(material.normal, _UvCoord);

        // Flip normal if we're viewing the back of a tri
        if (!gl_FrontFacing)
        {
            _Normal_cameraSpace = -_Normal_cameraSpace;
        }

        vec3 _totalDirect = vec3(0.0, 0.0, 0.0);
        vec3 _totalAmbient = vec3(0.0, 0.0, 0.0);
        vec3 _totalSpecular = vec3(0.0, 0.0, 0.0);

        for (/* for each light */)
        {
            float attenuation = 1.0;

            vec3 _lightDirect;
            vec3 _lightAmbient = vec3(0.0, 0.0, 0.0);
            vec3 _lightSpecular = vec3(0.0, 0.0, 0.0);

            vec3 _lightPosition_worldSpace = lights[i].position.xyz;
            vec3 _lightPosition_cameraSpace = vec3(_View * vec4(_lightPosition_worldSpace, 1.0));
            vec3 _lightDirection_cameraSpace = normalize(_lightPosition_cameraSpace - _FragPosition_cameraSpace);

            //...

            //******** Direct lighting ********
            float _diff;

            if (material.hasNormal)
            {
                vec3 textureNormal_tangentSpace = normalize(_normalTexel.rgb * 2.0 - 1.0);
                _diff = max(dot(textureNormal_tangentSpace, gs_out.TBN_cameraSpace * _lightDirection_cameraSpace), 0.0);
            }
            else
            {
                _diff = max(dot(_Normal_cameraSpace, _lightDirection_cameraSpace), 0.0);
            }

            // properties.x: intensity, properties.w: intensity modifier
            _lightDirect = lights[i].colour.xyz * lights[i].properties.x * lights[i].properties.w * _diff;

            //...

            _lightAmbient *= attenuation;
            _lightDirect *= attenuation;
            //_lightSpecular *= attenuation;

            //_totalAmbient += _lightAmbient;
            _totalDirect += _lightDirect;
            //_totalSpecular += _lightSpecular;
        }

        //...

        FragColor = vec4(_totalDirect + _totalAmbient + _totalSpecular + _totalEmission, 1.0) * _diffuseTexel;
    }

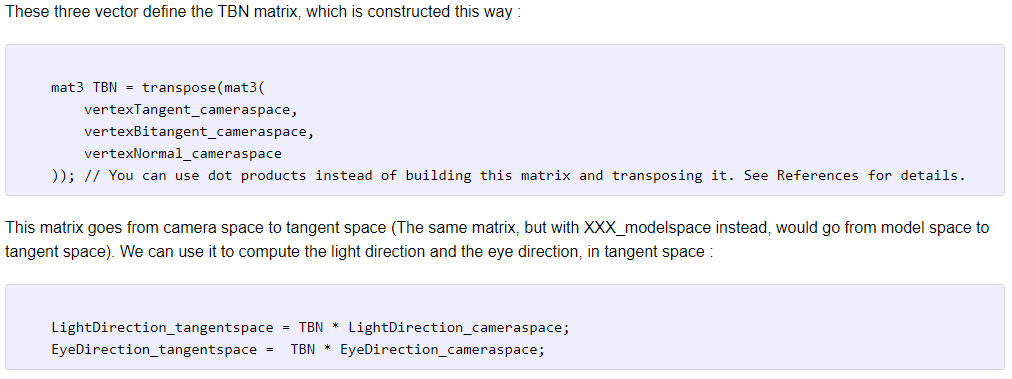

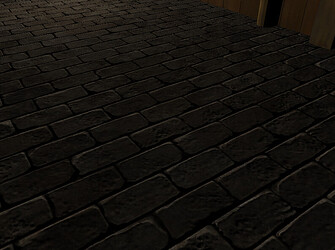

If the material has a normal map, I calculate _diff from the normal map’s RGB value and _lightDirection_cameraSpace, which I convert to tangent space by multiplying it by TBN_cameraSpace. The result is shading that changes with the current camera angle and is inconsistent with the direction of the actual light source.
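
For reference, the direction of a TBN multiplication can be sketched as follows. A matrix whose columns are T, B and N expressed in camera space maps tangent-space vectors into camera space; going the other way needs its inverse, which equals the transpose only when the three columns are orthonormal. This is a minimal sketch under that assumption, and the names v_TBN_cameraSpace, v_lightDir_cameraSpace, v_uv and u_normalMap are hypothetical rather than taken from the shaders above.

```glsl
#version 330 core

// Hypothetical interface, for illustration only.
in mat3 v_TBN_cameraSpace;       // columns T, B, N, each expressed in camera space
in vec3 v_lightDir_cameraSpace;  // direction from the fragment towards the light
in vec2 v_uv;

uniform sampler2D u_normalMap;

out vec4 FragColor;

void main()
{
    // Re-normalize the columns after interpolation.
    // Assumption: T, B and N are mutually perpendicular.
    mat3 TBN = mat3(normalize(v_TBN_cameraSpace[0]),
                    normalize(v_TBN_cameraSpace[1]),
                    normalize(v_TBN_cameraSpace[2]));

    vec3 L_camera  = normalize(v_lightDir_cameraSpace);
    vec3 N_tangent = normalize(texture(u_normalMap, v_uv).rgb * 2.0 - 1.0);

    // Option A: take the sampled normal from tangent space into camera space
    // and compare it with the light direction there.
    vec3 N_camera = normalize(TBN * N_tangent);
    float diffA = max(dot(N_camera, L_camera), 0.0);

    // Option B: take the light direction from camera space into tangent space.
    // transpose(TBN) is the inverse of TBN only under the orthonormality assumption.
    vec3 L_tangent = transpose(TBN) * L_camera;
    float diffB = max(dot(N_tangent, L_tangent), 0.0);

    // The red and green channels should match when the assumption holds.
    FragColor = vec4(diffA, diffB, 0.0, 1.0);
}
```

Either option keeps both operands of the dot product in the same space. Multiplying a camera-space vector by TBN itself would instead treat that vector as if it were already in tangent space.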

I know there is a lot of room to improve how and where I’m making these calculations, but if anyone could point out the specific error in my conversions or calculations, I would greatly appreciate it. Thanks!