Hi,

I’m studying the root cause of some perfomance hit on a particular case that I’m having which I believe has something to do with the hardware fillrate / overdraw limitations. Nevertheless, I still want to dedicate some time into improving (or find alternatives) for a solution to mitigate this behaviour:

So I have a scene with:

-

30 cubes:

- Each one composed of 4 faces, each with 2 triangles and drawn with

glDrawElements(GL_TRIANGLES, indicesCount, GL_UNSIGNED_INT, 0);

- Each one composed of 4 faces, each with 2 triangles and drawn with

- Viewport resolution of 1920 x 1027

- Deferred shading (6 color attachments)

- 3 Render Passes (geometry + screen shading + screen post processing)

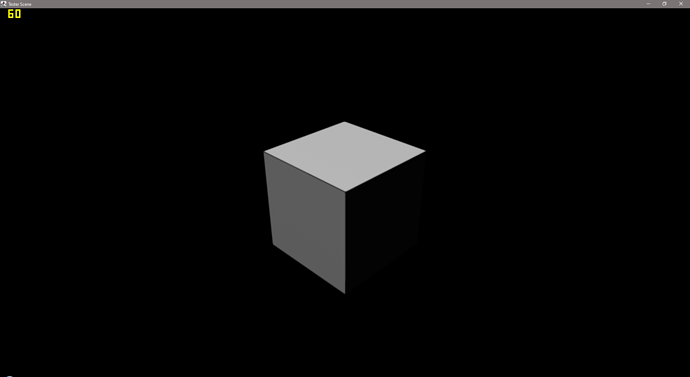

and when rendering those 30 objects at once in the same position, with a good camera distance from them, I get 60 fixed FPS, with a low GPU usage (~40%)

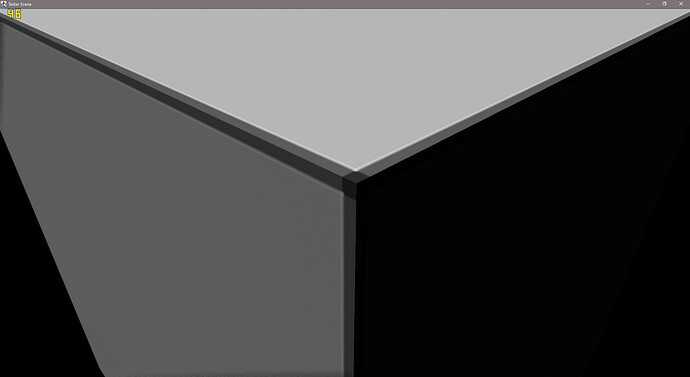

but when rendering those 30 objects at once in the same position, with a close camera distance, I get around 40 FPS, with a high GPU usage (~90%)

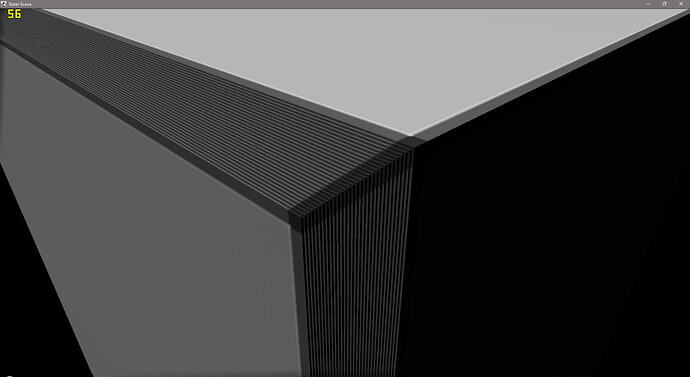

The same distance but with a small separatino between the cubes, I get a little more frames, but still a high GPU usage.

I only want to share this behaviour here in order to discuss it further so I can find some good viewpoints on how to go around it, becuase this could impact when rendering scenes where some particles (compsed of billboarded sprites come in close distance with the camera, or when using transparency with multiple layered objects at once).

Is there any name or known way of dealing with this, because I haven’t found anything while searching for it.

regards,

Jakes