I’m trying to implement the OpenCV fisheye camera model in an OpenGL shader to speed up rendering, following OpenCV’s documentation. However, the result I get still has some distortion.
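
For reference, this is the model from the OpenCV fisheye documentation as I understand it, for a point $(X, Y, Z)$ in camera coordinates, with distortion coefficients $k_1,\dots,k_4$, focal lengths $f_x, f_y$, principal point $(c_x, c_y)$ and skew $\alpha$:

$$
\begin{aligned}
a &= X/Z, \qquad b = Y/Z, \qquad r = \sqrt{a^2 + b^2}, \qquad \theta = \arctan(r) \\
\theta_d &= \theta\,\bigl(1 + k_1\theta^2 + k_2\theta^4 + k_3\theta^6 + k_4\theta^8\bigr) \\
x' &= \frac{\theta_d}{r}\,a, \qquad y' = \frac{\theta_d}{r}\,b \\
u &= f_x\,(x' + \alpha\,y') + c_x, \qquad v = f_y\,y' + c_y
\end{aligned}
$$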

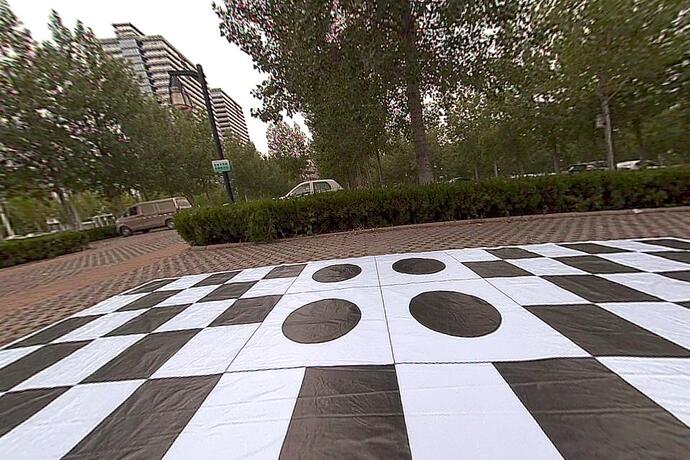

Here is the result I get from my OpenGL shader (don’t worry about the scale issue):

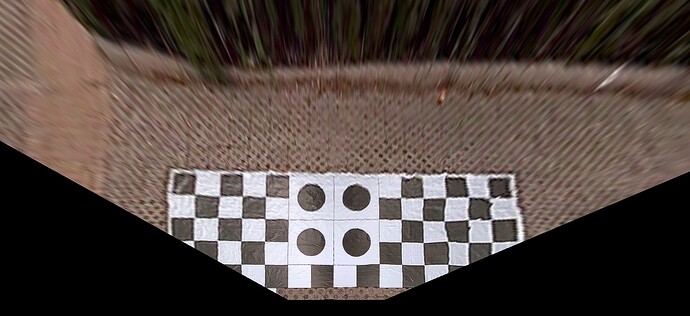

And here is the result I get from OpenCV initUndistortRectifyMap and remap with exactly the same camera matrix.

The shader result is clearly still slightly distorted, and the distortion becomes more visible once I also apply the perspective transformation. The following is a comparison between the OpenGL shader perspective result and the OpenCV warpPerspective result.

I have verified that the perspective transformation in my shader is correct by passing the OpenCV-undistorted image to the shader. So the problem should be in the undistortion part of my shader, which is shown here:

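    void main() {
        // Pixel position in the output (undistorted) image.
        float i = textureCoords.x * windowWidth;
        float j = textureCoords.y * windowHeight;
        // Normalized camera coordinates. The indices follow OpenCV's layout
        // (fx = [0][0], cx = [0][2], fy = [1][1], cy = [1][2]). GLSL indexes a
        // mat3 as [column][row], so this assumes the matrix was uploaded accordingly.
        float xc = (i - cameraMatrix[0][2]) / cameraMatrix[0][0];
        float yc = (j - cameraMatrix[1][2]) / cameraMatrix[1][1];
        // Angle of the incoming ray and its distorted counterpart (theta, theta_d).
        float r = sqrt(xc*xc + yc*yc);
        float degree = atan(r);
        float rd = degree + distortVector[0]*pow(degree,3) + distortVector[1]*pow(degree,5) + distortVector[2]*pow(degree,7) + distortVector[3]*pow(degree,9);
        // Guard against 0/0 at the principal point, where r is zero.
        float scale = (r > 1e-8) ? rd / r : 1.0;
        float x = xc * scale;
        float y = yc * scale;
        // Project back to pixel coordinates in the distorted source image.
        // The 0.1 is a hard-coded value for the skew coefficient (alpha in the docs).
        float u = cameraMatrix[0][0]*(x+0.1*y) + cameraMatrix[0][2];
        float v = cameraMatrix[1][1]*y + cameraMatrix[1][2];
        vec2 coords = vec2(u/textureWidth, v/textureHeight);
        // Sample the source texture, or output transparent black outside it.
        if (coords.x>=0.0 && coords.x<=1.0 && coords.y>=0.0 && coords.y<=1.0) {
            gl_FragColor = texture(inputTexture, coords);
        } else {
            gl_FragColor = vec4(0.0,0.0,0.0,0.0);
        }
    }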
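
In case it helps with reproducing this, here is a minimal CPU-side sketch of the same per-pixel mapping in plain Python, so that individual pixels can be compared against the shader output. The function name `fisheye_source_pixel` and its parameters are made up for illustration; it mirrors the shader math above, not OpenCV's actual implementation, and the `skew` argument is an assumption that stands in for the hard-coded `0.1` (pass `skew=0.1` to reproduce the shader, or leave it at `0.0` to see what changes).

```python
import math


def fisheye_source_pixel(i, j, fx, fy, cx, cy, k, skew=0.0):
    """Map an output pixel (i, j) to the source pixel (u, v) it samples from.

    Hypothetical helper mirroring the shader: fx, fy, cx, cy come from the
    camera matrix, k holds the four distortion coefficients, and skew is
    the alpha term (the shader hard-codes 0.1).
    """
    # Normalized camera coordinates.
    xc = (i - cx) / fx
    yc = (j - cy) / fy

    # Ray angle and distorted angle: theta_d = theta * (1 + k1*t^2 + ... + k4*t^8).
    r = math.hypot(xc, yc)
    theta = math.atan(r)
    t2 = theta * theta
    theta_d = theta * (1.0 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4)

    # Avoid 0/0 at the principal point.
    scale = theta_d / r if r > 1e-8 else 1.0
    x = xc * scale
    y = yc * scale

    # Back to pixel coordinates in the distorted image.
    u = fx * (x + skew * y) + cx
    v = fy * y + cy
    return u, v
```

Calling it once with `skew=0.0` and once with `skew=0.1` for a pixel away from the horizontal center line shows how far the skew term alone shifts the sampled `u` coordinate.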

Any ideas? Please let me know.