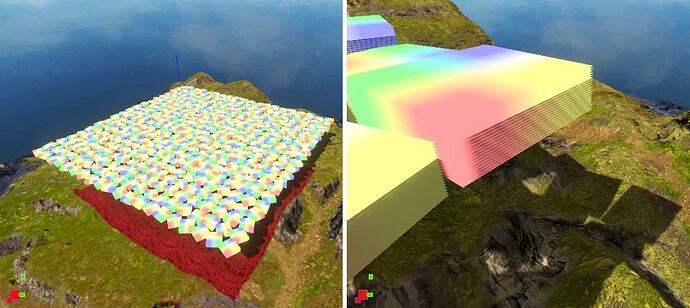

First, I reduced the problem to an even smaller sandbox. The scene is now 400 instances of a model made of 20 quads, each quad being 2 triangles (16,000 triangles in total).

I tried rendering the quads in different orders: from top to bottom and vice versa. There was no difference in FPS or stalls. That seems reasonable, because the depth test and (probably) the depth write after the fragment shader may be not just a write operation but an atomic read-modify-write.

The terrain under the quads is cheap because it’s cached while the camera does not move. It is not re-rendered, it only gets blitted in a few calls.

Also I tested how vertex processing impacts FPS:

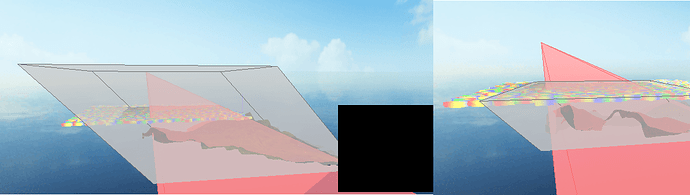

- Render the quad models with frustum culling disabled while looking away from them, so all vertices get processed, but the primitives get clipped.

- Render the same scene without models.

There is no difference between these two setups.

Backface culling enabled?

SCISSOR_TEST, STENCIL_TEST, BLEND all disabled?

GL_POLYGON_OFFSET_FILL disabled?

PolygonMode FRONT_AND_BACK = FILL?

GL_MULTISAMPLE and POLYGON_SMOOTH disabled?

Yes to all of these; that is what I meant by “rasterizer and pix ops state” in the initial post.

Other options are more interesting.

DepthFunc LESS not LEQUAL?

In the initial test it was LEQUAL; however, in the new scene with quads there should be no difference between the two. OK, I switched to LESS and measured no difference in FPS or in the profiler.

ALPHA_TEST

So ancient that there is no such field in the frame debugger.

Nevertheless, I disabled it manually and got no difference.

ColorMask(0,0,0)? StencilMask(0)?

They were enabled, as I assumed that is OK because the shadowmap has stencil and no color attachments. Again, I disabled them explicitly for the test, and nothing changed.

All of the above are “not” changed between glClear( DEPTH ) and the end of shadow render?

As I mentioned above, the terrain is cached and gets blitted to the shadowmap before any other rendering. It works perfectly within our camera restrictions, so there is no glClear for the shadowmap.

Nevertheless, I ensured that all the state changes you advised are made before blitting.

So between the blit and the draw call there are only a VAO binding, some SSBO bindings, a few glUniform* calls and texture bindings.

16-bit indices?

Sure. And not 8-bit, because many AMD drivers report performance warnings about 8-bit indices in the debug output.

Could be, on your specific GPU+driver.

Maybe, but the initial problem with trees appeared on a wide variety of Nvidia and AMD GPUs. Here I’m not sure whether it’s the same problem on all GPUs.

How does the UE depth prepass affect shadow gen render? Or does it?

It does not affect it. UE runs the prepass from the view camera before shadow rendering, but the shadowmap generation program does not sample the prepass depth.

I mentioned the prepass just to point out the difference between my color pass and UE’s.

I wonder if UE is doing some implicit small feature culling.

It looks like it’s not. Closely viewed trees in the color pass and trees in the furthest (i.e. least detailed) shadowmap slice have the same number of vertices. If UE can do this, it definitely needs explicit enabling and tuning.

That’s worth testing. Though I’d suspect you’re getting good texture cache hits.

The texture in the old scene was 256x256, and the texture in the new scene is 4x4, both with mipmaps. A 4x4 texture should reside entirely in the texture cache on any hardware.

Is the alpha in your renderer’s texture MIPmaps computed the same way as in UE?

Good point. No, not the same way. I just compare alpha to 0.5, and UE does some more arithmetic (still in one line of code), which I didn’t look into deeply.
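To illustrate why the mip alpha matters for a plain 0.5 cutoff, here is a minimal sketch. It assumes a simple 2x2 box filter for the mip chain and a test of the form `alpha >= cutoff`; the function names are hypothetical, and this is not UE’s formula (which I haven’t looked into). It only shows that the fraction of texels passing the cutoff can change from one mip level to the next.

```cpp
#include <cstddef>
#include <vector>

// Fraction of texels whose alpha passes the cutoff test (alpha >= cutoff).
double coverage(const std::vector<float>& alpha, float cutoff) {
    if (alpha.empty()) return 0.0;
    std::size_t pass = 0;
    for (float a : alpha) {
        if (a >= cutoff) ++pass;
    }
    return static_cast<double>(pass) / static_cast<double>(alpha.size());
}

// Next mip level of a square (size x size) alpha channel, 2x2 box filter.
// Assumes size is even and src holds size * size values in row-major order.
std::vector<float> boxDownsample(const std::vector<float>& src, std::size_t size) {
    const std::size_t half = size / 2;
    std::vector<float> dst(half * half);
    for (std::size_t y = 0; y < half; ++y) {
        for (std::size_t x = 0; x < half; ++x) {
            const std::size_t r0 = (2 * y) * size + 2 * x;  // top-left texel
            const std::size_t r1 = r0 + size;               // texel below it
            dst[y * half + x] =
                0.25f * (src[r0] + src[r0 + 1] + src[r1] + src[r1 + 1]);
        }
    }
    return dst;
}
```

For a 4x4 alpha channel with one opaque texel in every 2x2 block, a quarter of level 0 passes the 0.5 cutoff, while every texel of level 1 averages to 0.25 and gets rejected. So two renderers with different mip alpha can shade quite different numbers of fragments on distant geometry.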

Could be. But in your image above, you’re looking at a top-down view. So all the trees are probably being plastered into the same split

With no tuning, UE draws the scene with an ugly seam between shadow cascades, which is clearly visible when the camera moves. So first I tuned the camera to see 2 or 3 seams, and then verified with the frame debugger that 3 or 4 splits are drawn into.

You could force UE to always use one split.

I tried, but without success so far; I will continue later. To be more specific, I made UE use one split, but it looked like the least detailed one, because it rendered the whole scene into only a small chunk of the shadowmap, and I haven’t yet found how to tune the shadow bounding volume.

Syncing the shadow gen near/far between UE and your renderer may be very important for depth precision and fragment acceptance/rejection rate.

Good point too! I didn’t compare to UE yet, but made another experiment.

In my renderer it’s possible to “freeze” the shadow volume and look at it from the side. In the screenshots, the shadow near and far planes are the horizontal faces of the grey volume, which are parallel to the model’s quads and almost parallel to the view direction. The left screenshot shows what they were initially, and the right one shows them after tuning. I think both variants are good enough for 16 bits. For example, in the right screenshot the distance between the shadow’s near and far planes is approximately 200, and the distance between the model’s quads is 5.
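A rough check of the 16-bit claim, as a sketch only. It assumes the shadow projection is orthographic, so depth is distributed linearly between near and far (with a perspective projection the distribution would not be uniform), and it ignores rasterization slope and bias. The function names are made up for this example.

```cpp
#include <cassert>
#include <cstdint>

// Size of one depth step in world units for a linear (orthographic)
// fixed-point depth buffer with the given number of bits.
double depthStep(double nearToFar, int bits) {
    assert(bits > 0 && bits < 64);
    const double levels =
        static_cast<double>((std::uint64_t{1} << bits) - 1);
    return nearToFar / levels;
}

// Number of representable depth steps between two parallel surfaces
// that are `separation` world units apart along the light direction.
double stepsBetween(double separation, double nearToFar, int bits) {
    return separation / depthStep(nearToFar, bits);
}
```

With the numbers from the right screenshot, `depthStep(200.0, 16)` is roughly 0.003 units and `stepsBetween(5.0, 200.0, 16)` is about 1638, so neighbouring quads are separated by well over a thousand depth values under these assumptions.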

Could provide some useful clues

GL_FRAGMENT_SHADER_INVOCATIONS_ARB should be the same as “Shaded fragments” from the first post. In the first post I was talking about 140-150M shaded fragments, while in the new sandbox I see the effect (FPS drop and drain stall) at 50-60M shaded fragments. The only difference with fewer fragments is that the absolute FPS is higher.

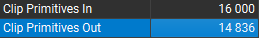

I have paid attention to GL_CLIPPING_INPUT_PRIMITIVES and GL_CLIPPING_OUTPUT_PRIMITIVES in the debugger from the very beginning, and they seem to be OK.

UE’s out/in ratio is usually lower.

For now, there are only a few things you advised that I haven’t tried:

- Bake instances into a single pseudo-instanced model.

- Make UE fill the whole shadowmap with one slice, and try to sync the near-far distance between UE and my engine.

Will do it a bit later and report the results.