Hello,

I’m trying to work on a modeler for basic shapes (cubes, icospheres, cones, etc.) in such a way that transforming an object only affects its mesh structure.

For example:

I have a cube object 1 unit long on each side (so its vertices are at ±0.5 on each axis), with the full image mapped onto each face (UVs from 0.0 to 1.0). For any scaling applied to it, I want to adjust the UVs so that the texture keeps the same size relative to the world around it: if before the transformation the right face showed the texture once (1x1), then after scaling 2x on Y the right face should show it tiled 1x2.
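To make the requirement concrete, here is a small sketch (in Python, only to illustrate the arithmetic; the real version would live in the shader) of how many times the texture should repeat on each face of the unit cube after a non-uniform scale, assuming one full tile per world unit. The name `face_tiles` and the axis convention (right face spans Z and Y, top face spans X and Z) are hypothetical choices for illustration.

```python
def face_tiles(normal_axis, scale):
    """Return (u_tiles, v_tiles) for one face of a unit cube after scaling.

    normal_axis: 0 if the face normal points along X (left/right faces),
                 1 along Y (top/bottom), 2 along Z (front/back).
    scale: (sx, sy, sz) applied to the cube.
    Assumes one full texture tile per world unit.
    """
    sx, sy, sz = scale
    if normal_axis == 0:   # right/left face: U runs along Z, V along Y
        return (sz, sy)
    if normal_axis == 1:   # top/bottom face: U runs along X, V along Z
        return (sx, sz)
    return (sx, sy)        # front/back face: U runs along X, V along Y


scale = (1.0, 2.0, 1.0)          # scale 2x on Y
print(face_tiles(0, scale))      # right face -> (1.0, 2.0)
print(face_tiles(1, scale))      # top face   -> (1.0, 1.0)
```

The point this makes explicit is that a Y scale should only stretch the faces that actually extend along Y; the top and bottom faces should be unaffected.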

So far I have managed to scale the texture by applying the same transformation matrix as the model matrix to the texture coordinate in the vertex shader.

The issue is that the texture coordinate only has 2 components while the model has 3, so I can only transform 2 of them correctly (x and y, which map to U and V).

I have been trying to find a way to correctly transform (project?) the 3D axes onto the 2D map so that it stays visually correct. These are my results so far:
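One common way to do this projection is box (cubic) mapping: pick the axis the face normal points along, and build U and V from the scaled position on the other two axes. Below is a minimal sketch of that idea in Python, assuming object-space positions lie in [-0.5, 0.5] (the unit cube above) and that the object's per-axis scale is known; `box_uv` is a hypothetical name, not an existing API.

```python
def box_uv(position, normal, scale):
    """Planar UV for one vertex, projected along the dominant normal axis.

    position: object-space vertex position in [-0.5, 0.5], e.g. (0.5, -0.5, 0.5).
    normal:   object-space face normal.
    scale:    (sx, sy, sz) of the object, so UVs are measured in world units
              (one tile per unit).
    """
    # Shift into [0, 1] first, then scale, so each face starts at UV (0, 0).
    px, py, pz = ((p + 0.5) * s for p, s in zip(position, scale))
    ax, ay, az = (abs(n) for n in normal)
    if ax >= ay and ax >= az:   # face points along X: project onto the ZY plane
        return (pz, py)
    if ay >= az:                # face points along Y: project onto the XZ plane
        return (px, pz)
    return (px, py)             # face points along Z: project onto the XY plane


s = (1.0, 2.0, 1.0)
print(box_uv((0.5, 0.5, 0.5), (1, 0, 0), s))   # right face corner -> (1.0, 2.0)
print(box_uv((0.5, 0.5, 0.5), (0, 1, 0), s))   # top face corner   -> (1.0, 1.0)
```

Because U and V come from the position rather than from the stored UVs, the top face no longer picks up the Y scale. Opposite faces (left/right, etc.) will show the texture mirrored with this simple version; flipping U based on the sign of the normal is one way to handle that if it matters.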

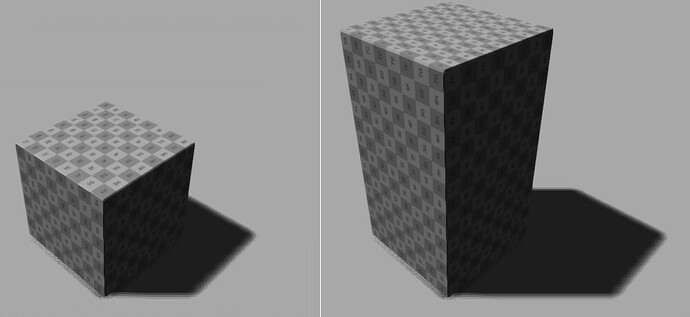

Side View:

(here it works fine because coincidentally the Y axis in the world coordinates matches the V axis in the texture coordinates)

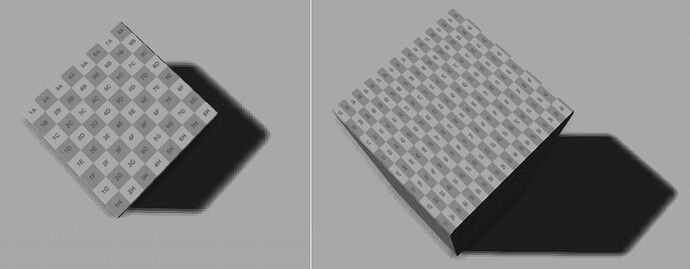

Top View:

(when scaling along the Y axis, the top view gets distorted because the V axis is wrongly transformed along with the Y axis)
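The distortion can be reproduced numerically. For a pure scale matrix (no rotation or translation), `vec2(modelMat * vec4(texCoord, 1.0, 1.0))` multiplies U by sx and V by sy on every face, regardless of which way the face points. A small Python sketch of that behaviour (`naive_uv` is a hypothetical name):

```python
def naive_uv(uv, scale):
    """Mimics vec2(modelMat * vec4(texCoord, 1.0, 1.0)) for a pure scale
    matrix: U is multiplied by sx and V by sy, whatever face the vertex is on."""
    u, v = uv
    sx, sy, _sz = scale
    return (u * sx, v * sy)


scale = (1.0, 2.0, 1.0)
# The top face's far corner has UV (1, 1) before scaling.
print(naive_uv((1.0, 1.0), scale))   # (1.0, 2.0): the top face now tiles 1x2
```

On the top face, V runs along Z, whose extent did not change, so the expected tiling is still 1x1; the extra factor of 2 is exactly the stretch visible in the top view.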

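Vertex shader:

```glsl
gl_Position = modelViewProjMat * vert;
// Transform the 2D texture coordinate by the model matrix (as if it were a
// 3D point); only the resulting x and y are kept as U and V.
v_TexCoord = vec2(modelMat * vec4(texCoord, 1.0, 1.0));
```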

I then use `v_TexCoord` as usual to sample the texture.
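If the UVs are instead derived from the scaled position, the shader needs the per-axis scale rather than the full matrix applied to `texCoord`. For a model matrix built from translation, rotation and scale (no shear), each of the first three columns is a rotated axis multiplied by that axis's scale, so the column lengths give the scale. A sketch in Python, assuming the matrix is stored as 16 floats in column-major order (the OpenGL layout); `scale_from_model` is a hypothetical name:

```python
import math


def scale_from_model(m):
    """Per-axis scale of a TRS model matrix given as 16 floats in column-major
    order. Assumes no shear: column c (c = 0, 1, 2) is a unit axis times scale c."""
    return tuple(
        math.sqrt(m[4 * c] ** 2 + m[4 * c + 1] ** 2 + m[4 * c + 2] ** 2)
        for c in range(3)
    )


# Scale 2x on Y plus a translation of (5, 0, 0). The translation lives in
# column 3 and does not affect the result.
model = [1.0, 0.0, 0.0, 0.0,
         0.0, 2.0, 0.0, 0.0,
         0.0, 0.0, 1.0, 0.0,
         5.0, 0.0, 0.0, 1.0]
print(scale_from_model(model))   # (1.0, 2.0, 1.0)
```

In GLSL the same values are `length(modelMat[0].xyz)`, `length(modelMat[1].xyz)` and `length(modelMat[2].xyz)`, since indexing a `mat4` returns a column. The texture's wrap mode also needs to be set to repeat for UVs above 1.0 to tile.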

Is there a way to project (or otherwise apply) the same 3D transformations onto the texture so that the map stays fixed relative to world coordinates, without using 3D textures?
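For shapes whose normals are not axis-aligned (icospheres, cones), picking a single projection axis produces visible seams. A commonly used alternative is triplanar mapping: sample the same 2D texture three times, once per planar projection (ZY, XZ, XY of the scaled position), and blend the samples with weights derived from the normal. Only ordinary 2D textures are needed. A minimal Python sketch of the weight calculation, where `triplanar_weights` and its `sharpness` parameter are hypothetical names:

```python
def triplanar_weights(normal, sharpness=1.0):
    """Blend weights for the X, Y and Z planar projections.

    Each weight is |n_i| ** sharpness, normalised so the three sum to 1.
    A larger sharpness narrows the region where projections are blended.
    Assumes a non-zero normal.
    """
    w = [abs(n) ** sharpness for n in normal]
    total = sum(w)
    return tuple(wi / total for wi in w)


print(triplanar_weights((0.0, 1.0, 0.0)))   # (0.0, 1.0, 0.0): Y projection only
```

In a fragment shader, each weight would multiply the texture sample taken with the matching planar UVs, and the three weighted samples are summed.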

Regards,

Jakes