I have implemented a depth pre-pass in my engine. It works well, except when I enable MSAA.

What I need:

- fill the default depth buffer with multisampled pre-pass depths

- have the (resolved) depth pre-pass data in a texture to use it for SSAO.

What I have tried:

- depth pre-pass into a multisampled FBO with a texture attachment, then glBlitFramebuffer to the default depth buffer: the final image has jagged edges

- depth pre-pass into the default depth buffer directly: works perfectly, but then I can't manage to blit the depth map from the default depth buffer to another FBO with a texture attachment, for later use.

I have checked that the multisampled FBO really does contain multisampled data:

- if I blit it with glBlitFramebuffer for visualization, it does not appear antialiased.

- but if I resolve it with a shader (averaging the sample values), it appears antialiased.
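
To make the difference between these two observations concrete, here is a minimal CPU-side sketch of two ways to reduce one pixel's depth samples to a single value. It is not engine code: `resolvePickOne` and `resolveAverage` are hypothetical names, and the pick-one variant is only an assumption about what a driver might do, since (as far as I understand) the way glBlitFramebuffer resolves depth samples is left to the implementation.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical model: the depth samples stored for one pixel of a
// multisampled depth buffer (e.g. 4 values for 4x MSAA).

// Resolve by keeping a single sample (sample 0 here). The result is always
// one of the stored depths, so a pixel on a silhouette snaps to one side.
float resolvePickOne(const std::vector<float>& samples) {
    assert(!samples.empty());
    return samples[0];
}

// Resolve by averaging all samples, which is what the shader-based
// visualization described above does.
float resolveAverage(const std::vector<float>& samples) {
    assert(!samples.empty());
    const float sum = std::accumulate(samples.begin(), samples.end(), 0.0f);
    return sum / static_cast<float>(samples.size());
}
```

For an edge pixel whose samples are {0.25, 0.25, 1.0, 1.0}, the pick-one variant yields 0.25 while the average yields 0.625. An intermediate value like that is what makes the shader-resolved image look antialiased, whereas a pick-one result looks like a single-sampled depth map.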

So, the problem seems to lie in the copy stage:

- when I use glBlitFramebuffer to copy the multisampled FBO into a normal one for visualization, it does not resolve the samples

- when I use glBlitFramebuffer to copy the multisampled FBO to the default depth buffer, it does not copy multisampled data successfully, whereas the result is perfect if I avoid the copy by directly rendering the depth pre-pass into the default depth buffer.
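
For the copy stage, the glBlitFramebuffer reference lists a few conditions that raise GL_INVALID_OPERATION for depth and multisampled blits. The sketch below encodes those conditions as a plain C++ check so they can be compared against the two framebuffers involved. `BlitSide` and `depthBlitProblem` are hypothetical names made up for illustration, and the values would have to be filled in by hand or queried from the driver (for example with `glGetIntegerv(GL_SAMPLES, ...)` while the framebuffer is bound).

```cpp
#include <cassert>
#include <string>

// Hypothetical description of one side of a glBlitFramebuffer call.
struct BlitSide {
    int samples;           // sample count; 0 is used here to mean single-sampled
    int width;             // width of the blit rectangle on this side
    int height;            // height of the blit rectangle on this side
    unsigned depthFormat;  // any identifier that compares equal for equal formats
};

// Returns an empty string if a GL_DEPTH_BUFFER_BIT blit passes the checks,
// otherwise a short description of the first condition that fails.
std::string depthBlitProblem(const BlitSide& src, const BlitSide& dst,
                             bool filterIsNearest) {
    assert(src.samples >= 0 && dst.samples >= 0);
    if (!filterIsNearest) {
        return "depth blits require GL_NEAREST";
    }
    if (src.depthFormat != dst.depthFormat) {
        return "depth formats differ";
    }
    const bool anyMultisampled = src.samples > 0 || dst.samples > 0;
    if (anyMultisampled && (src.width != dst.width || src.height != dst.height)) {
        return "multisampled blits cannot scale";
    }
    if (src.samples > 0 && dst.samples > 0 && src.samples != dst.samples) {
        return "sample counts differ";
    }
    return "";
}
```

In this setup the two things worth comparing would be ANTI_ALIASING_SAMPLES against the sample count of the default framebuffer, and the texture's GL_DEPTH_COMPONENT format against the default depth buffer's format. This is only a checklist under the stated assumptions, not a diagnosis of the jagged edges.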

Here is the code for the first attempt (multisampled FBO with a texture attachment, then glBlitFramebuffer).

Depth pre-pass framebuffer creation:

    GLCall(glGenFramebuffers(1, &cameraDepthMapFBO));
    GLCall(glGenTextures(1, &cameraDepthMapTexture));

    // Multisampled depth texture; fixed sample locations are requested (GL_TRUE).
    GLCall(glBindTexture(GL_TEXTURE_2D_MULTISAMPLE, cameraDepthMapTexture));
    GLCall(glTexImage2DMultisample(GL_TEXTURE_2D_MULTISAMPLE, ANTI_ALIASING_SAMPLES, GL_DEPTH_COMPONENT,
                                   SCR_WIDTH, SCR_HEIGHT, GL_TRUE));
    // Multisample textures have no sampler state, so no filter parameters are set.

    // Depth-only framebuffer: attach the texture and disable color reads/writes.
    GLCall(glBindFramebuffer(GL_FRAMEBUFFER, cameraDepthMapFBO));
    GLCall(glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D_MULTISAMPLE, cameraDepthMapTexture, 0));
    GLCall(glDrawBuffer(GL_NONE));
    GLCall(glReadBuffer(GL_NONE));

    if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE) {
        std::cout << "Early depth test Framebuffer not complete!" << std::endl;
    }

    GLCall(glBindFramebuffer(GL_FRAMEBUFFER, 0));

Here I render the depth pre-pass on the FBO:

    GLCall(glViewport(0, 0, SCR_WIDTH, SCR_HEIGHT));
    GLCall(glBindFramebuffer(GL_FRAMEBUFFER, cameraDepthMapFBO));
    GLCall(glClear(GL_DEPTH_BUFFER_BIT));

    // All draw commands

    GLCall(glBindFramebuffer(GL_FRAMEBUFFER, 0));

Here I copy the early-z data into the default depth buffer, and then render the scene:

    GLCall(glClearColor(0.01f, 0.01f, 0.01f, 1.0f));
    GLCall(glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT | GL_STENCIL_BUFFER_BIT));
    GLCall(glViewport(0, 0, SCR_WIDTH, SCR_HEIGHT));

    // Copy early-z data into default depth buffer
    GLCall(glBindFramebuffer(GL_READ_FRAMEBUFFER, cameraDepthMapFBO));
    GLCall(glBindFramebuffer(GL_DRAW_FRAMEBUFFER, 0));
    GLCall(glBlitFramebuffer(0, 0, SCR_WIDTH, SCR_HEIGHT, 0, 0, SCR_WIDTH, SCR_HEIGHT, GL_DEPTH_BUFFER_BIT, GL_NEAREST));

    glDepthFunc(GL_LEQUAL);

    // All draw commands

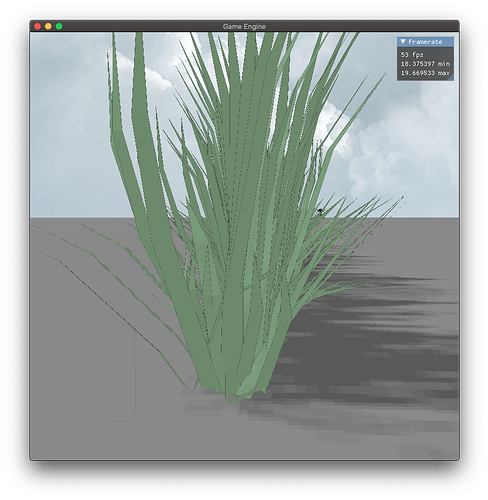

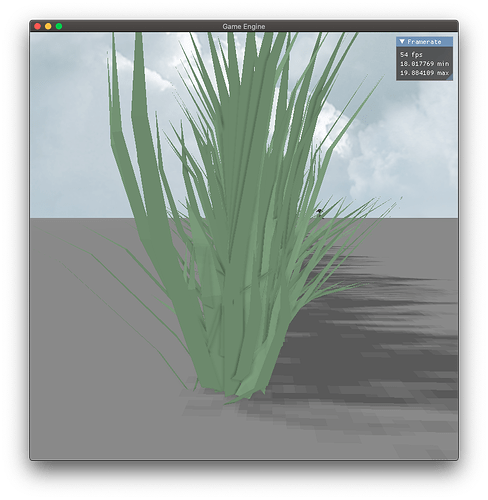

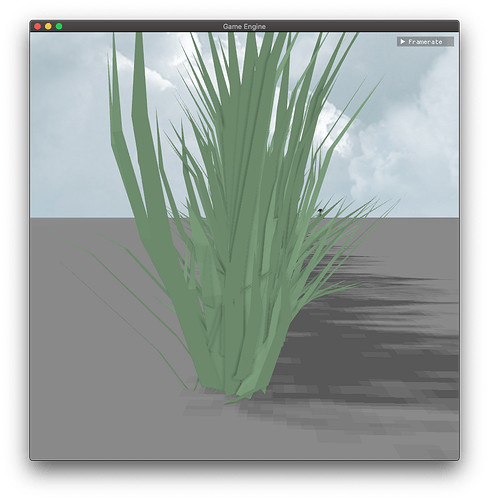

The images above show the result: jagged edges caused by depth map aliasing.

Any ideas would be greatly appreciated.