System info:

Debian 10 Linux, NVIDIA driver 440.100, on a GTX 1650.

It is an NVIDIA Optimus system, and I am using nvidia-xrun to render on the dedicated GPU instead of the Intel one.

When rendering with the Intel GPU, there is no corruption.

However, when I use the NVIDIA GPU, corruption appears when reading from and writing to a framebuffer in succession.

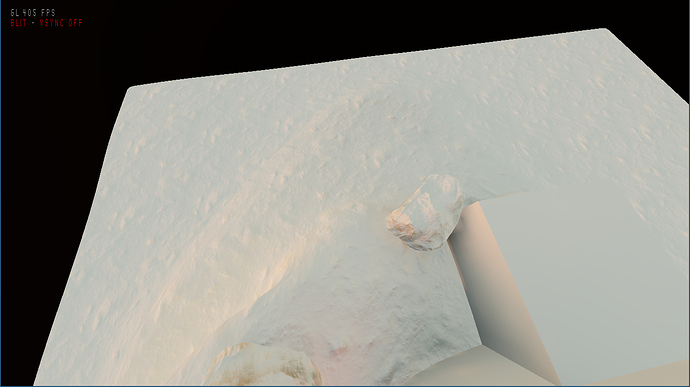

Here is the renderer in a “working state” on nvidia.

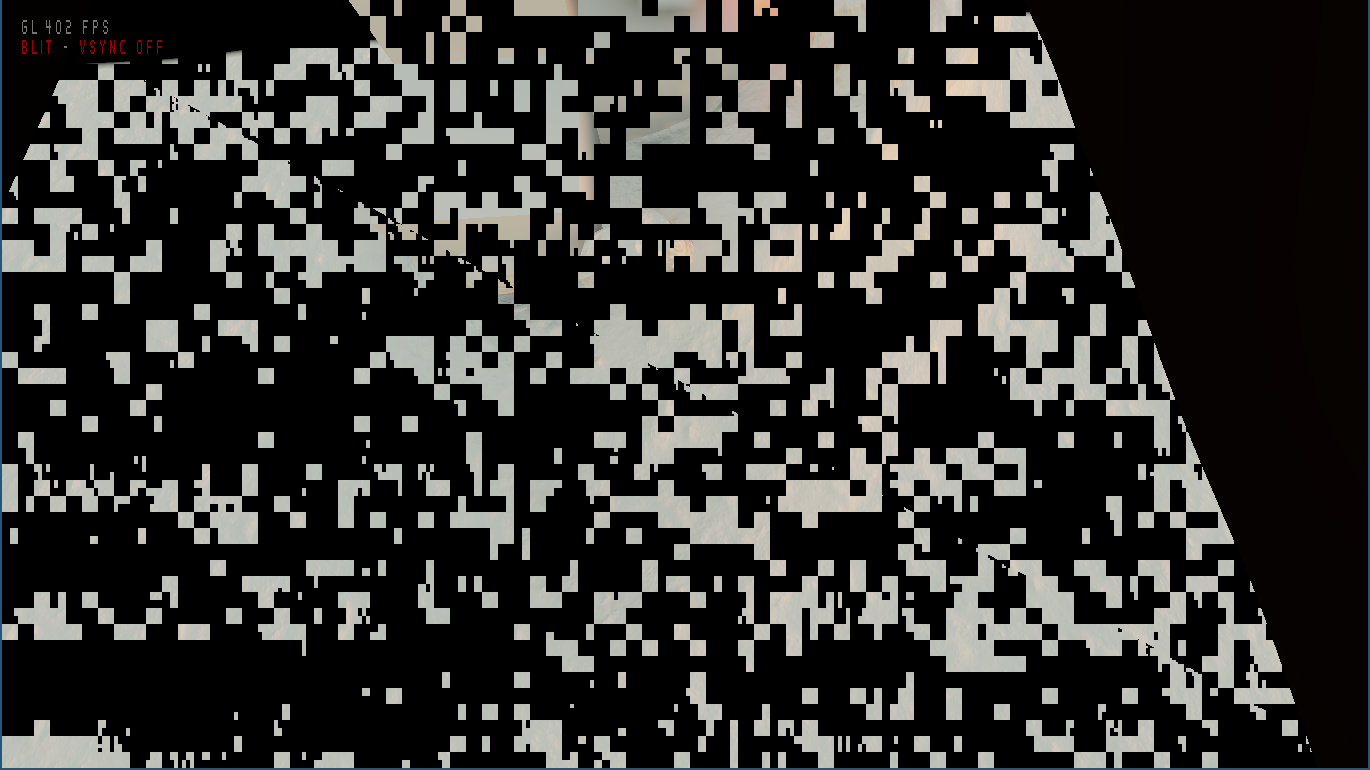

Here is the graphical corruption when running a simple passthrough shader:

The passthrough shader simply samples from a buffer that has already been written to. This is done by the following lines in the shader:

```glsl
layout(location = 0) out vec3 fragColor;
layout(binding = 0) uniform sampler2D irradianceMap;

// In main(): sample the previously written buffer at this fragment's screen position
fragColor = texture(irradianceMap, gl_FragCoord.xy * invWindow).rgb;
```

The corruption does not appear when running the Unigine Superposition benchmark or the Unigine forest benchmark.

No errors are reported by either `glGetError` or the OpenGL debug context.

When viewed in apitrace, the corruption is the same.

Any help or leads would be appreciated.

The only similar problem I found was in Unity, but I cannot find any information about the true source of that problem:

https://forum.unity.com/threads/graphical-corruption-in-2018-2.542646/?_ga=2.142022006.597464846.1595173589-307192312.1595173589