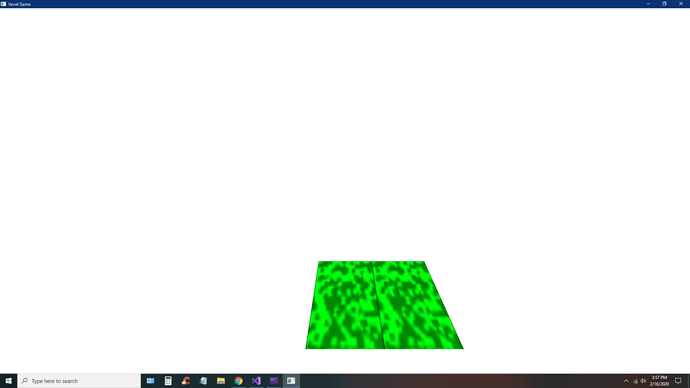

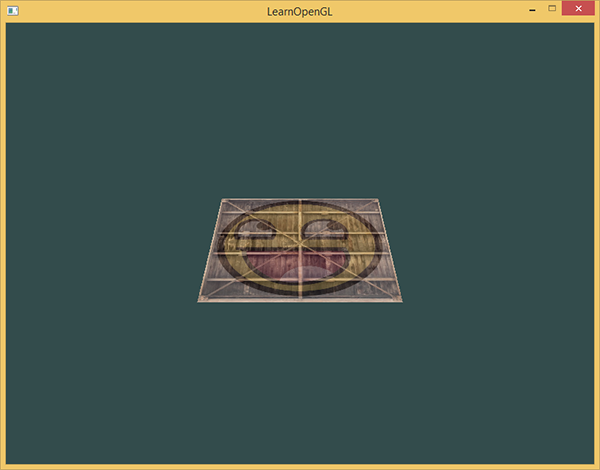

My program renders two textured squares side by side. I am trying to add a perspective projection matrix, but it doesn't work: as soon as I multiply the vertex position by it in the vertex shader (`gl_Position = projection * vec4(...)`), nothing is drawn.

```cpp
#include <iostream>

#include "glad/glad.h"
#include "GLFW/glfw3.h"
#include "glm/glm.hpp"
#include "glm/ext.hpp"
#include "glm/gtc/matrix_transform.hpp"
#include "SOIL.h"

#define out std::cout
#define end std::endl

const GLchar *vertexShaderSource = R"(
#version 330 core
layout (location = 0) in vec3 pos;
layout (location = 1) in vec2 coord;

out vec2 TexCoord;

uniform float aspect_ratio;
uniform mat4 model;
uniform mat4 view;
uniform mat4 projection;

void main()
{
    gl_Position = projection * vec4(pos.x, pos.y, pos.z, 1.0);
    TexCoord = coord;
}
)";

const GLchar *fragmentShaderSource = R"(
#version 330 core
in vec2 TexCoord;

out vec4 Color;

uniform sampler2D ourTexture;

void main()
{
    //Color = vec4(1.0, 0.5, 0.2, 1.0);
    Color = texture(ourTexture, TexCoord);
}
)";

void framebuffer_size_callback(GLFWwindow*, GLint, GLint);
int render(GLFWwindow*, GLuint, GLuint);
void buffer(GLuint&, GLuint&, GLuint&, float [], unsigned int []);
void shader(GLuint, GLuint);
void processInput(GLFWwindow*);

GLuint shaderProgram;

/* Flow
main -> render -> shader -> processInputs
*/
```
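The vertex shader's `gl_Position = projection * vec4(pos, 1.0)` is a plain 4×4 matrix times 4-vector product; OpenGL then clips primitives to the volume -w ≤ x, y, z ≤ w and divides by w. The sketch below reproduces that step on the CPU so values can be checked by hand. `Vec4`, `Mat4`, `identity`, `mul` and `insideClipVolume` are hypothetical helpers written for this illustration (not GLM or OpenGL functions), and the matrix is stored column-major (`m[column][row]`), the layout GLM and GLSL use for `mat4`.

```cpp
#include <array>

// Hypothetical helper types for this sketch (not part of GLM or OpenGL).
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>; // m[column][row], column-major like GLM/GLSL

// Identity matrix, the equivalent of glm::mat4(1.0f).
Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// r = m * v: each component of r is the dot product of one matrix row with v.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            r[row] += m[col][row] * v[col];
    return r;
}

// True if the clip-space position lies inside the view volume -w <= x, y, z <= w,
// the volume OpenGL clips primitives against before dividing by w.
bool insideClipVolume(const Vec4& c) {
    const float w = c[3];
    for (int i = 0; i < 3; ++i)
        if (c[i] < -w || c[i] > w) return false;
    return true;
}
```

With the identity matrix w stays 1, so each position is used directly as a normalized device coordinate, which is consistent with the squares appearing when no projection is applied. `Mat4 t = identity(); t[3][2] = -3.0f;` is a translation by (0, 0, -3), the same column `glm::translate` fills in, and `mul(t, {0.2f, 0.2f, 0.0f, 1.0f})` gives z = -3.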

```cpp
int main()
{
    glfwInit();
    glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);
    glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);
    glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);
    glfwWindowHint(GLFW_RESIZABLE, GL_TRUE);

    GLFWwindow *window = glfwCreateWindow(1280, 720, "Voxel Game", NULL, NULL);
    if (!window)
    {
        out << "Failed to create GLFW window" << end;
        glfwTerminate();
        return -1;
    }
    else
    {
        glfwMakeContextCurrent(window);
        glfwSetFramebufferSizeCallback(window, framebuffer_size_callback);
    }

    gladLoadGL();
    if (!gladLoadGLLoader((GLADloadproc)glfwGetProcAddress))
    {
        out << "Failed to initialize GLAD" << end;
        return -1;
    }

    const GLFWvidmode* mode = glfwGetVideoMode(glfwGetPrimaryMonitor());
    GLint window_width = mode->width;
    GLint window_height = mode->height;

    render(window, window_width, window_height);
    return 0;
}
```

```cpp
int render(GLFWwindow *window, GLuint window_width, GLuint window_height)
{
    float vertices[] =
    {
        // positions          // texture coords
         0.2f,  0.2f, 0.0f,   1.0f, 1.0f, // top right
         0.2f, -0.2f, 0.0f,   1.0f, 0.0f, // bottom right
        -0.2f, -0.2f, 0.0f,   0.0f, 0.0f, // bottom left
        -0.2f,  0.2f, 0.0f,   0.0f, 1.0f  // top left
    };

    float vertices2[] =
    {
        // positions          // texture coords
         0.6f,  0.2f, 0.0f,   1.0f, 1.0f, // top right
         0.6f, -0.2f, 0.0f,   1.0f, 0.0f, // bottom right
         0.2f, -0.2f, 0.0f,   0.0f, 0.0f, // bottom left
         0.2f,  0.2f, 0.0f,   0.0f, 1.0f  // top left
    };

    unsigned int indices[] =
    {
        0, 1, 3, // first triangle
        1, 2, 3  // second triangle
    };

    // Vertex Array, Vertex Buffer and Element Buffer
    GLuint VAO[2], VBO[2], EBO[2];
    glGenVertexArrays(2, VAO);
    glGenBuffers(2, VBO);
    glGenBuffers(2, EBO);

    glBindVertexArray(VAO[0]);
    glBindBuffer(GL_ARRAY_BUFFER, VBO[0]);
    glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO[0]);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(indices), indices, GL_STATIC_DRAW);
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)0);
    glEnableVertexAttribArray(0);
    glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)(3 * sizeof(float)));
    glEnableVertexAttribArray(1);

    glBindVertexArray(VAO[1]);
    glBindBuffer(GL_ARRAY_BUFFER, VBO[1]);
    glBufferData(GL_ARRAY_BUFFER, sizeof(vertices2), vertices2, GL_STATIC_DRAW);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO[1]);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(indices), indices, GL_STATIC_DRAW);
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)0);
    glEnableVertexAttribArray(0);
    glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)(3 * sizeof(float)));
    glEnableVertexAttribArray(1);

    shader(window_width, window_height);

    // Load Image
    int width, height, channels;
    unsigned char *image = SOIL_load_image("grass.png", &width, &height, &channels, SOIL_LOAD_RGB);

    // Texture
    unsigned int texture;
    glGenTextures(1, &texture);
    glBindTexture(GL_TEXTURE_2D, texture);

    // Settings
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_BORDER);

    // Texture Image
    if (image)
    {
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, image);
        glGenerateMipmap(GL_TEXTURE_2D);
    }
    else
    {
        out << "Failed to load texture" << end;
    }
    SOIL_free_image_data(image);

    // Render Loop
    while (!glfwWindowShouldClose(window))
    {
        processInput(window);

        glClearColor(1.0f, 1.0f, 1.0f, 1.0f);
        glClear(GL_COLOR_BUFFER_BIT);

        //glBindTexture(GL_TEXTURE_2D, texture);
        //glUseProgram(shaderProgram);

        glBindVertexArray(VAO[0]);
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0); // 6 = number of indices (3 per triangle)
        glBindVertexArray(VAO[1]);
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);

        glfwSwapBuffers(window);
        glfwPollEvents();
    }

    glDeleteVertexArrays(2, VAO);
    glDeleteBuffers(2, VBO);
    glDeleteBuffers(2, EBO);
    glDeleteTextures(1, &texture);
    glfwTerminate();
    return 0;
}
```
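Each vertex in `vertices` and `vertices2` is five floats (three for position, two for texture coordinates), which is where the stride `5 * sizeof(float)` and the texture-coordinate offset `3 * sizeof(float)` in the `glVertexAttribPointer` calls come from. The sketch below expresses the same layout as a struct; `Vertex` and `kSquare` are hypothetical names for illustration, and the `static_assert`s check at compile time that the struct has no padding, so `sizeof(Vertex)` and `offsetof(Vertex, uv)` would equal the hand-computed numbers.

```cpp
#include <cstddef>

// Hypothetical interleaved vertex matching one row of `vertices`:
// x, y, z followed by the texture coordinates s, t.
struct Vertex {
    float pos[3]; // attribute 0 (layout location = 0): vec3 pos
    float uv[2];  // attribute 1 (layout location = 1): vec2 coord
};

// Stride and offset used by the glVertexAttribPointer calls.
static_assert(sizeof(Vertex) == 5 * sizeof(float), "stride is 5 floats");
static_assert(offsetof(Vertex, uv) == 3 * sizeof(float), "uv starts after 3 floats");

// The first square, written with the struct instead of a flat float array.
const Vertex kSquare[4] = {
    {{ 0.2f,  0.2f, 0.0f}, {1.0f, 1.0f}}, // top right
    {{ 0.2f, -0.2f, 0.0f}, {1.0f, 0.0f}}, // bottom right
    {{-0.2f, -0.2f, 0.0f}, {0.0f, 0.0f}}, // bottom left
    {{-0.2f,  0.2f, 0.0f}, {0.0f, 1.0f}}, // top left
};
```

With such a struct, the texture-coordinate attribute could be set up as `glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, uv));`, which keeps the stride and offset in sync with the data if the layout changes.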

```cpp
void shader(GLuint window_width, GLuint window_height)
{
    GLuint vertexShader = glCreateShader(GL_VERTEX_SHADER);
    glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);
    glCompileShader(vertexShader);

    GLuint fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);
    glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);
    glCompileShader(fragmentShader);

    shaderProgram = glCreateProgram();
    glAttachShader(shaderProgram, vertexShader);
    glAttachShader(shaderProgram, fragmentShader);
    glLinkProgram(shaderProgram);
    glUseProgram(shaderProgram);

    // Uniform locations are GLint; -1 means the name is not an active uniform
    GLint location = glGetUniformLocation(shaderProgram, "aspect_ratio");
    glUniform1f(location, window_width / (float)window_height);

    glm::mat4 model = glm::mat4(1.0f); // make sure to initialize matrix to identity matrix first
    glm::mat4 view = glm::mat4(1.0f);
    glm::mat4 projection = glm::perspective(glm::radians(180.0f), window_width / (float)window_height, 0.1f, 100.0f);

    // retrieve the matrix uniform locations
    GLint modelLoc = glGetUniformLocation(shaderProgram, "model");
    GLint viewLoc = glGetUniformLocation(shaderProgram, "view");
    GLint projectionLoc = glGetUniformLocation(shaderProgram, "projection");

    // upload each matrix to its uniform
    glUniformMatrix4fv(modelLoc, 1, GL_FALSE, glm::value_ptr(model));
    glUniformMatrix4fv(viewLoc, 1, GL_FALSE, glm::value_ptr(view));
    glUniformMatrix4fv(projectionLoc, 1, GL_FALSE, glm::value_ptr(projection));

    glDeleteShader(vertexShader);
    glDeleteShader(fragmentShader);
}
```
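`glm::perspective(fovy, aspect, zNear, zFar)` builds the standard OpenGL perspective matrix (with GLM's default right-handed, -1..1 depth convention). The sketch below writes out, in closed form, the clip coordinates that matrix produces for an eye-space point (x, y, z, 1), so the arguments passed above can be checked by hand. Two terms matter here: x and y are scaled by f = 1 / tan(fovy / 2), which is essentially 0 for a 180° field of view because tan 90° is unbounded, and w_clip = -z_eye, which is 0 for vertices at z = 0 when the view matrix is the identity, placing them closer than zNear = 0.1 so they fail the near-plane test. `focalScale`, `perspectiveClip` and `passesNearPlane` are hypothetical helpers for this illustration, not GLM functions.

```cpp
#include <array>
#include <cmath>

// Hypothetical helper type for this sketch: clip-space (x, y, z, w).
using Vec4 = std::array<double, 4>;

constexpr double kPi = 3.14159265358979323846;

// x/y scale of a perspective matrix: f = 1 / tan(fovy / 2), fovy in degrees.
double focalScale(double fovyDegrees) {
    const double halfFovyRadians = fovyDegrees * kPi / 180.0 / 2.0;
    return 1.0 / std::tan(halfFovyRadians);
}

// Clip coordinates of the eye-space point (x, y, z, 1) under the right-handed,
// -1..1 depth perspective matrix that glm::perspective builds by default.
Vec4 perspectiveClip(double fovyDegrees, double aspect, double zNear, double zFar,
                     double x, double y, double z) {
    const double f = focalScale(fovyDegrees);
    return {
        x * f / aspect,                              // row 0: (f / aspect) * x
        y * f,                                       // row 1: f * y
        -(zFar + zNear) / (zFar - zNear) * z
            - 2.0 * zFar * zNear / (zFar - zNear),   // row 2: depth
        -z                                           // row 3: w_clip = -z_eye
    };
}

// Near-plane part of OpenGL's clip test: a vertex is kept only if z_clip >= -w_clip.
bool passesNearPlane(const Vec4& clip) {
    return clip[2] >= -clip[3];
}
```

Under these assumptions, a field of view such as 45° together with geometry (or a view translation) that places the squares between zNear and zFar, for example at z = -3, gives w > 0 and keeps the vertices inside the view volume.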

```cpp
void processInput(GLFWwindow* window)
{
    if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS) glfwSetWindowShouldClose(window, true);
}

void framebuffer_size_callback(GLFWwindow* window, GLint width, GLint height)
{
    glViewport(0, 0, width, height);
}
```
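`main` takes the aspect ratio from the primary monitor's video mode (`glfwGetVideoMode`), while the window itself is created at 1280×720, and `framebuffer_size_callback` only updates the viewport. One option, assumed here rather than taken from the code above, is to derive the aspect ratio from the framebuffer size GLFW reports (the callback's `width`/`height`, or `glfwGetFramebufferSize`) and rebuild the projection when it changes. The helper below is a hypothetical sketch of that calculation; it returns no value for a zero-sized framebuffer, which can occur on some platforms while the window is minimized, so the caller can keep the previous projection instead of dividing by zero.

```cpp
#include <optional>

// Hypothetical helper: aspect ratio of the framebuffer size reported by GLFW.
// Returns std::nullopt for a zero-sized framebuffer (e.g. a minimized window),
// so the caller can skip rebuilding the projection instead of dividing by zero.
std::optional<float> framebufferAspect(int width, int height) {
    if (width <= 0 || height <= 0) return std::nullopt;
    return static_cast<float>(width) / static_cast<float>(height);
}
```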