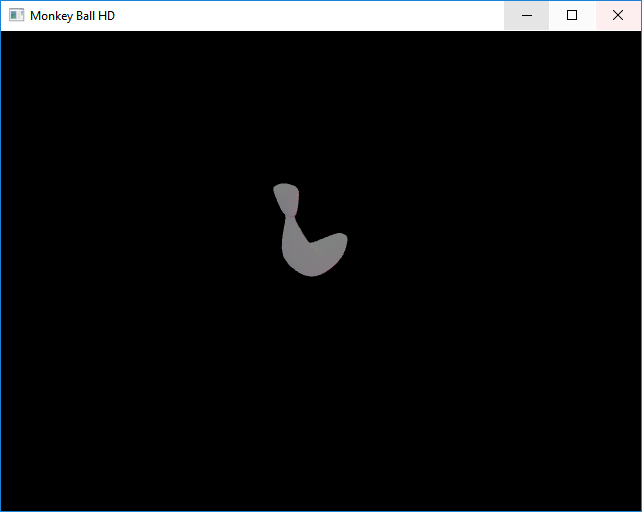

For some reason, my normals are getting transformed in a weird way. Here’s what it looks like when I output the normals as colors (scaling the -1 to 1 range to the 0 to 1 range of course):
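
For reference, the remapping mentioned above can be sketched on the CPU side as follows. This is only an illustration of the `(n + 1.0) / 2.0` step at the end of the fragment shader; `Vec3`, `encodeNormalAsColor`, `decodeColorToNormal` and `checkEncoding` are hypothetical names, not part of my actual code.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<float, 3>;  // hypothetical stand-in for a GLSL vec3

// Map each component of a normal from [-1, 1] to [0, 1] so it can be
// displayed as an RGB color, mirroring (o_Normal.xyz + vec3(1.0)) / 2.0.
Vec3 encodeNormalAsColor(const Vec3& n) {
    return Vec3{(n[0] + 1.0f) / 2.0f, (n[1] + 1.0f) / 2.0f, (n[2] + 1.0f) / 2.0f};
}

// Inverse mapping, handy for reading a debug color back as a direction.
Vec3 decodeColorToNormal(const Vec3& c) {
    return Vec3{c[0] * 2.0f - 1.0f, c[1] * 2.0f - 1.0f, c[2] * 2.0f - 1.0f};
}

// Usage check: a normal pointing along +Z encodes to the color (0.5, 0.5, 1.0).
void checkEncoding() {
    const Vec3 color = encodeNormalAsColor(Vec3{0.0f, 0.0f, 1.0f});
    assert(color[0] == 0.5f && color[1] == 0.5f && color[2] == 1.0f);
}
```

With this encoding, a normal facing straight down the +Z axis of whatever space it is expressed in shows up as the familiar light blue.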

Vertex shader:

    #version 330 core

    layout(location=0) in vec3 a_Position;
    layout(location=1) in vec3 a_Normal;
    layout(location=2) in vec4 a_Tangent;
    layout(location=4) in vec2 a_UV;
    layout(location=6) in ivec4 a_Joints;
    layout(location=7) in vec4 a_Weights;

    layout(std140) uniform u_VertexShaderData
    {
        mat4 u_Model;
        mat4 u_View;
        mat4 u_Projection;
        mat4 u_NormalMtx;
        mat4 u_ShadowBias;
        mat4 u_Bones[28];
        mat4 u_NormalBones[28];
    };

    out vec4 o_Position;
    out vec4 o_Normal;
    out vec4 o_Tangent;
    out vec4 o_Bitangent;
    out vec2 o_UV;
    out vec4 o_ShadowCoord;

    void main()
    {
        vec3 v_Position = a_Position;
        vec3 v_Normal = a_Normal;
        vec3 v_Tangent = a_Tangent.xyz;
        vec3 v_Bitangent = cross(a_Normal,a_Tangent.xyz);

        vec4 w_Position = vec4(v_Position,1.0);
        vec4 w_Normal = vec4(v_Normal,0.0);
        vec4 w_Tangent = vec4(v_Tangent,0.0);
        vec4 w_Bitangent = vec4(v_Bitangent,0.0);

        for(int i=0; i<4; i++)
        {
            mat4 m_Bone = u_Bones[a_Joints[i]];
            mat4 m_NormalBone = u_NormalBones[a_Joints[i]];

            v_Position += (m_Bone*w_Position).xyz*a_Weights[i];
            v_Normal += (m_NormalBone*w_Normal).xyz*a_Weights[i];
            v_Tangent += (m_NormalBone*w_Tangent).xyz*a_Weights[i];
            v_Bitangent += (m_NormalBone*w_Bitangent).xyz*a_Weights[i];
        }

        mat4 m_Position = u_Projection*u_View*u_Model;

        o_Normal = normalize(u_NormalMtx*vec4(v_Normal,0.0));
        o_Tangent = normalize(u_NormalMtx*vec4(v_Tangent,0.0));
        o_Tangent.w = a_Tangent.w;
        o_Bitangent = normalize(u_NormalMtx*vec4(v_Bitangent,0.0));
        o_UV = a_UV;

        gl_Position = m_Position*vec4(v_Position,1.0);
        o_ShadowCoord = u_ShadowBias*gl_Position;
    }

Fragment shader:

    #version 330 core

    in vec4 o_Position;
    in vec4 o_Normal;
    in vec4 o_Tangent;
    in vec4 o_Bitangent;
    in vec2 o_UV;
    in vec4 o_ShadowCoord;

    const float u_EnvSize = 9.0;
    const float u_IrrLod = 6.0;

    uniform vec4 u_LightDirection;
    uniform sampler2DShadow u_ShadowMap;
    uniform samplerCube u_Radiance;
    uniform sampler2D u_Albedo;
    uniform sampler2D u_Metalness;
    uniform sampler2D u_NormalMap;
    uniform sampler2D u_Roughness;
    uniform sampler2D u_Emission;

    out vec4 o_FragColor;

    vec3 sampleHDR(vec4 c)
    {
        return vec3(c.rgb*exp2((c.a*255.0)-128.0));
    }

    float sampleShadow()
    {
        return 1.0;
        //float bias = 0.005*tan(acos(dot(o_Normal,normalize(-u_LightDirection))));
        //bias = clamp(bias,0.0,0.01);
        //return 0.5+0.5*step(o_ShadowCoord.z-bias/o_ShadowCoord.w,texture(u_ShadowMap,o_ShadowCoord.xyz));
    }

    void main()
    {
        vec4 v_Albedo = texture(u_Albedo,o_UV);
        vec4 v_NormalMap = (texture(u_NormalMap,o_UV)*2.0)-vec4(1.0);
        v_NormalMap.b = sqrt(1.0-dot(v_NormalMap.rg,v_NormalMap.rg));

        vec4 v_Normal = (vec4(o_Tangent.xyz,0.0)*v_NormalMap.r);
        v_Normal += (o_Bitangent*v_NormalMap.g);
        v_Normal = normalize(v_Normal+(o_Normal*v_NormalMap.b))*o_Tangent.w;
        vec4 v_Reflect = reflect(v_Normal,vec4(0.0,0.0,-1.0,0.0));

        float f_Roughness = u_EnvSize*texture(u_Roughness,o_UV).r;
        float f_Metalness = texture(u_Metalness,o_UV).r;
        vec3 v_Emission = texture(u_Emission,o_UV).rgb;
        float f_Emission = (0.3*v_Emission.r)+(0.6*v_Emission.g)+(0.1*v_Emission.b);

        vec3 v_Specular = vec3(1.0);
        //vec3 v_Specular = vec3(max(0.0,pow(dot(v_Reflect,vec4(0.577,0.577,0.577,0.0)),4.0+(44.0*f_Roughness))));
        //vec3 v_Specular = sampleHDR(textureLod(u_Radiance,v_Reflect.xyz,f_Roughness));

        vec3 v_Diffuse = vec3(0.5);
        //vec3 v_Diffuse = vec3(0.5*max(0.0,dot(v_Normal,vec4(0.577,0.577,0.577,0.0))));
        //vec3 v_Diffuse = sampleHDR(textureLod(u_Radiance,v_Normal.xyz,u_IrrLod));

        float f_Shadow = sampleShadow();
        float f_Fresnel = 0.04+0.96*pow(max(0.0,1.0-dot(v_Normal,vec4(0.0,0.0,-1.0,0.0))),5.0);

        vec3 v_Metal0 = v_Albedo.rgb*v_Diffuse;
        vec3 v_Metal1 = v_Albedo.rgb*v_Specular;

        vec3 resultC = (f_Shadow*mix(mix(v_Metal0,v_Metal1,f_Metalness),v_Specular,f_Fresnel))+v_Emission;
        float resultA = mix(v_Albedo.a,1.0,f_Fresnel)+f_Emission;

        o_FragColor = vec4((o_Normal.xyz+vec3(1.0))/2.0,1.0);
    }

u_NormalMtx is calculated like so:

    vsd.u_NormalMtx = glm::transpose(glm::inverse(vsd.u_View*vsd.u_Model));

where vsd is the struct used to pass the interface block to the shader.
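
To make the intent of that inverse-transpose explicit, here is a minimal sketch without GLM, assuming only the upper-left 3x3 part of `u_View*u_Model` matters for directions. `Mat3`, `Vec3`, `mul`, `dot` and `inverseTranspose` are hypothetical helpers written for this illustration. Note that because `u_View` is part of the product, the resulting normals are expressed in view (eye) space rather than world space, so the debug colors are relative to the camera.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major: m[row][col]

Vec3 mul(const Mat3& m, const Vec3& v) {
    return Vec3{m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
                m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
                m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2]};
}

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// transpose(inverse(m)) equals the cofactor matrix of m divided by det(m).
Mat3 inverseTranspose(const Mat3& m) {
    Mat3 c{};
    c[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];
    const double det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    assert(det != 0.0);  // a singular matrix has no inverse
    for (auto& row : c)
        for (auto& value : row) value /= det;
    return c;
}
```

For example, with a non-uniform scale of (2, 1, 1), the tangent (1, 1, 0) becomes (2, 1, 0). Transforming the normal (1, -1, 0) with the same matrix gives (2, -1, 0), which is no longer perpendicular to the tangent, while the inverse-transpose gives (0.5, -1, 0), which is. My scale is uniform (50 on every axis), so in my case the two should only differ in length, which `normalize` removes.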

EDIT

Here’s how the view and projection matrices are set up:

    vsd.u_View = glm::lookAt(glm::vec3(0.0f,50.0f,50.0f),glm::vec3(0.0f),glm::vec3(0.0f,1.0f,0.0f));
    vsd.u_Projection = glm::perspectiveFov(2.0f,640.0f,480.0f,0.1f,1000.0f);

And here’s the model’s position, rotation, and scale:

    objBuffer[0].Position = glm::vec3(0.0f,0.0f,2.0f);
    objBuffer[0].Rotation = glm::angleAxis(0.785398f,glm::vec3(0.0f,1.0f,0.0f));
    objBuffer[0].Scale = glm::vec3(50.0f);

Finally, here’s how the model matrix is calculated from the position, rotation, and scale (this is inside a loop, hence the i):

    vsd.u_Model = glm::translate(glm::mat4(),objBuffer[i].Position);
    vsd.u_Model *= glm::toMat4(objBuffer[i].Rotation);
    vsd.u_Model *= glm::scale(glm::mat4(),objBuffer[i].Scale);
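
The order of those three multiplications is translate * rotate * scale, so a vertex is scaled first, then rotated, then translated. A minimal GLM-free sketch of the same composition, assuming a rotation about the Y axis and a uniform scale as in my setup (`Mat4`, `identity`, `multiply`, `translation`, `rotationY`, `uniformScale`, `transformPoint` and `modelMatrix` are hypothetical helpers for this illustration):

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat4 = std::array<std::array<double, 4>, 4>;  // row-major: m[row][col]

Mat4 identity() {
    Mat4 m{};  // value-initialized to all zeros
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0;
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 translation(const Vec3& t) {
    Mat4 m = identity();
    m[0][3] = t[0]; m[1][3] = t[1]; m[2][3] = t[2];
    return m;
}

// Right-handed rotation about the Y axis by `angle` radians.
Mat4 rotationY(double angle) {
    Mat4 m = identity();
    m[0][0] = std::cos(angle);  m[0][2] = std::sin(angle);
    m[2][0] = -std::sin(angle); m[2][2] = std::cos(angle);
    return m;
}

Mat4 uniformScale(double s) {
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

// Transform a point (implicit w = 1) by the top three rows of m.
Vec3 transformPoint(const Mat4& m, const Vec3& p) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
    return r;
}

// Same order as the snippet above: translate * rotate * scale.
Mat4 modelMatrix(const Vec3& position, double angleY, double scale) {
    assert(scale != 0.0);  // a zero scale would make the matrix non-invertible
    return multiply(multiply(translation(position), rotationY(angleY)),
                    uniformScale(scale));
}
```

With my values (position (0, 0, 2), angle 0.785398, scale 50), the object-space point (1, 0, 0) should land at roughly (35.36, 0, -33.36). One thing I am assuming here is that `glm::mat4()` gives the identity matrix. As far as I know that depends on the GLM version: from 0.9.9 on, default constructors leave the matrix uninitialized unless `GLM_FORCE_CTOR_INIT` is defined, and `glm::mat4(1.0f)` is the explicit way to ask for identity.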

EDIT 2

Here’s the vsd struct in full:

    typedef struct {
        glm::mat4 u_Model;
        glm::mat4 u_View;
        glm::mat4 u_Projection;
        glm::mat4 u_NormalMtx;
        glm::mat4 u_ShadowBias;
        glm::mat4 u_Bones[28];
        glm::mat4 u_NormalBones[28];
    } VertexShaderData;

    VertexShaderData vsd;
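
Since the block is declared `layout(std140)`, the C++ struct has to match the std140 offsets byte for byte. As I understand the rules, a `mat4` is stored as four `vec4` columns (64 bytes, 16-byte aligned) and an array of `mat4` has a stride of 64 bytes, so a struct made only of tightly packed 4x4 float matrices should line up. Here is a sketch of a compile-time check, using a hypothetical `Mat4` stand-in for `glm::mat4` (assumed to be 16 packed floats) and a hypothetical `boneOffset` helper:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for glm::mat4: 16 tightly packed floats.
struct Mat4 { float m[16]; };

// Mirror of the struct above, member for member.
struct VertexShaderData {
    Mat4 u_Model;
    Mat4 u_View;
    Mat4 u_Projection;
    Mat4 u_NormalMtx;
    Mat4 u_ShadowBias;
    Mat4 u_Bones[28];
    Mat4 u_NormalBones[28];
};

// Expected std140 offsets: each mat4 occupies 64 bytes, with no padding between.
static_assert(sizeof(Mat4) == 64, "mat4 must be 64 bytes");
static_assert(offsetof(VertexShaderData, u_NormalMtx) == 3 * 64, "u_NormalMtx offset");
static_assert(offsetof(VertexShaderData, u_Bones) == 5 * 64, "u_Bones offset");
static_assert(offsetof(VertexShaderData, u_NormalBones) == (5 + 28) * 64, "u_NormalBones offset");
static_assert(sizeof(VertexShaderData) == (5 + 28 + 28) * 64, "total block size");

// Byte offset of u_Bones[i] inside the uniform block.
std::size_t boneOffset(std::size_t i) {
    assert(i < 28);  // the block only declares 28 bones
    return offsetof(VertexShaderData, u_Bones) + i * sizeof(Mat4);
}
```

If these assertions hold for the real `glm::mat4`, the whole block is 3904 bytes and can be uploaded in one copy, which would suggest the struct layout itself is not the cause of the odd normals.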