[First post]

Hi all,

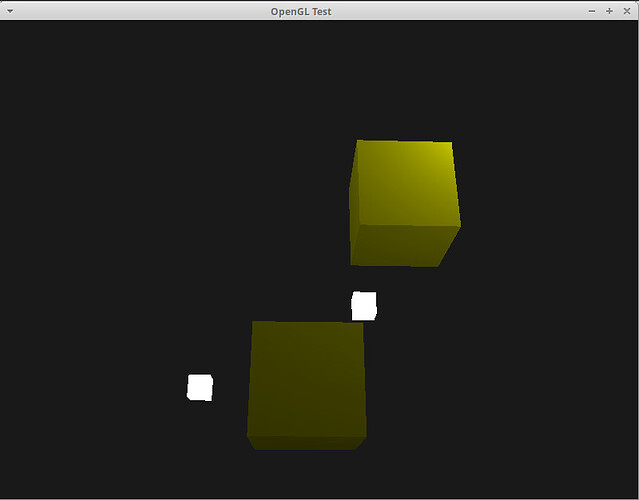

I’m having a weird issue with my scene: the lighting from all of my lights seems to land on the upper-right corner of my second cube. At least, that’s what it looks like; I’m not sure that’s what is actually happening.

I have two cubes (referred to as “objects” in my code) and three lights.

In my fragment shader, the lights are an array of Light structs.

In my C++ code, the position, model matrix, light properties, and material properties are passed to the shader via the glUniform*() functions.
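For reference, each field of each element in a uniform array of structs is looked up by its own name, such as `lights[2].position`. A minimal sketch of building those names follows; `light_uniform_name` is a hypothetical helper, not a function from the project, and it uses `std::string` instead of a fixed-size buffer.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds the GLSL name of one field of one element of
// the `lights` uniform array, e.g. "lights[2].position".
std::string light_uniform_name(int index, const std::string &field) {
    assert(index >= 0);
    return "lights[" + std::to_string(index) + "]." + field;
}
```

The result's `c_str()` can be passed to `glGetUniformLocation`. The longest name here for a single-digit index, `lights[0].quadratic`, is 19 characters, so it only just fits a 20-byte `char` buffer once the terminator is counted; an index of 10 or more would not fit.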

There’s also a second issue: the third light doesn’t show up at all.

I’m on Linux, and my code is mainly C++ with a bit of C. Here’s some of the code:

Fragment shader (cubes):

    #version 330 core

    out vec4 FragColor;

    struct Material {
        vec3 ambient;
        vec3 diffuse;
        vec3 specular;
        float shininess;
    };

    struct Light {
        vec3 position;
        vec3 ambient;
        vec3 diffuse;
        vec3 specular;
        float constant;
        float linear;
        float quadratic;
    };

    Light light;

    in vec3 FragPos;
    in vec3 Normal;
    in vec2 TexCoords;

    uniform vec3 viewPos;
    uniform Material material;
    uniform Light lights[3];

    vec3 CalcPointLight(Light light, vec3 normal, vec3 fragPos, vec3 viewDir) {
        vec3 lightDir = normalize(light.position - fragPos);
        float diff = max(dot(normal, lightDir), 0.0);
        vec3 reflectDir = reflect(-lightDir, normal);
        float spec = pow(max(dot(viewDir, reflectDir), 0.0), material.shininess);
        float distance = length(light.position - fragPos);
        float attenuation = 1.0 / (light.constant + light.linear * distance + light.quadratic * (distance * distance));
        vec3 ambient = light.ambient * material.ambient;
        vec3 diffuse = light.diffuse * diff * material.diffuse;
        vec3 specular = light.specular * spec * material.specular;
        ambient *= attenuation;
        diffuse *= attenuation;
        specular *= attenuation;
        return (ambient + diffuse + specular);
    }

    void main() {
        vec3 norm = normalize(Normal);
        vec3 viewDir = normalize(viewPos - FragPos);
        vec3 result = CalcPointLight(lights[0], norm, FragPos, viewDir)*lights[0].constant;
        result += CalcPointLight(lights[1], norm, FragPos, viewDir)*lights[1].constant;
        result += CalcPointLight(lights[2], norm, FragPos, viewDir)*lights[2].constant;
        /*for(int i = 0; i < lights.length(); i++)
            result += CalcPointLight(lights[i], norm, FragPos, viewDir)*lights[i].constant;*/
        // the results are the same anyways
        FragColor = vec4(result, 1.0);
    }
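To get a feel for the numbers, the attenuation term in `CalcPointLight` can be evaluated on the CPU. The sketch below is a plain C++ transcription of that one formula; the function name `attenuation` and the use of `double` are choices made for this illustration only.

```cpp
#include <cassert>

// Same formula as the attenuation line in CalcPointLight:
// 1 / (constant + linear * d + quadratic * d^2)
double attenuation(double constant, double linear, double quadratic, double distance) {
    assert(distance >= 0.0);
    return 1.0 / (constant + linear * distance + quadratic * distance * distance);
}
```

With the values set in the C++ code (`constant` 0.1, `linear` 0.2, `quadratic` 0.032), this factor is about 10 at distance 0 and stays above 1 out to roughly 3 units, so fragments near a light are brightened rather than dimmed before `main()` scales the result by `lights[i].constant`. With a `constant` of 1.0 the factor would never exceed 1.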

Light creation/rendering code:

    #include "scene/light.hpp"
    #include <stdio.h>

    int light = 0;

    char *light_prop(const char *attribute) {
        static char result[20];
        sprintf(result, "lights[%d].%s", light, attribute);
        return result;
    }

    GLuint create_light(glm::vec3 position, GLuint *objects, glm::vec3 view_pos, glm::mat4 projection, glm::mat4 view, glm::mat4 model) {
        GLuint program = create_program("./dat/shaders/light.vs", "./dat/shaders/light.fs");
        model = glm::translate(model, position);

        for (int i = 0; i < sizeof(objects)/sizeof(GLuint); i++) {
            glUseProgram(objects[i]);
            glUniform3fv(glGetUniformLocation(objects[i], light_prop("position")), 1, glm::value_ptr(position));
            glUniform3fv(glGetUniformLocation(objects[i], light_prop("ambient")), 1, glm::value_ptr(glm::vec3(1, 1, 1)));
            glUniform3fv(glGetUniformLocation(objects[i], light_prop("diffuse")), 1, glm::value_ptr(glm::vec3(1, 1, 1)));
            glUniform3f(glGetUniformLocation(objects[i], light_prop("specular")), 0.1f, 0.1f, 0.1f);
            glUniform1f(glGetUniformLocation(objects[i], light_prop("constant")), 0.1f);
            glUniform1f(glGetUniformLocation(objects[i], light_prop("linear")), 0.2f);
            glUniform1f(glGetUniformLocation(objects[i], light_prop("quadratic")), 0.032f);
        }

        glUseProgram(program);
        glUniform3fv(glGetUniformLocation(program, "selfColor"), 1, glm::value_ptr(glm::vec3(1.0f)));
        glUniformMatrix4fv(glGetUniformLocation(program, "model"), 1, GL_FALSE, glm::value_ptr(model));

        light += 1;
        return program;
    }

    void render_lights(GLuint *lights, unsigned int vao) {
        for (int i = 0; i < sizeof(lights)/sizeof(GLuint); i++) {
            glUseProgram(lights[i]);
            glBindVertexArray(vao);
            glDrawArrays(GL_TRIANGLES, 0, 36);
        }
    }

I keep the programs returned by create_light() in an array, and render_lights() is called in my main loop.
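One detail worth checking in the loop bounds: `objects` and `lights` are `GLuint *` parameters, so `sizeof(lights)/sizeof(GLuint)` measures the pointer rather than the array it points to. The sketch below isolates that behaviour without any OpenGL headers. `GLuintStandIn`, `count_via_pointer`, and `count_via_array` are hypothetical names for this illustration, and the stand-in type assumes `GLuint` is an `unsigned int`.

```cpp
#include <cassert>
#include <cstddef>

using GLuintStandIn = unsigned int;  // assumed stand-in for GLuint

// Mirrors the loop bound in the post: `ids` is a pointer here, so the size
// of the pointer itself is divided by the size of one element.
std::size_t count_via_pointer(GLuintStandIn *ids) {
    assert(ids != nullptr);
    const std::size_t pointer_bytes = sizeof(ids);
    return pointer_bytes / sizeof(GLuintStandIn);
}

// Possible alternative: take the array by reference so that its length N
// is deduced at compile time.
template <std::size_t N>
std::size_t count_via_array(GLuintStandIn (&)[N]) {
    return N;
}
```

On a typical 64-bit Linux build, where pointers are 8 bytes and the element type is 4 bytes, `count_via_pointer` returns 2 no matter how many elements were passed, which could be connected to the third light not being drawn. Passing the element count as an extra argument, or using a `std::vector<GLuint>`, would be other ways to avoid relying on `sizeof`.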

Help would be greatly appreciated!

Thanks.