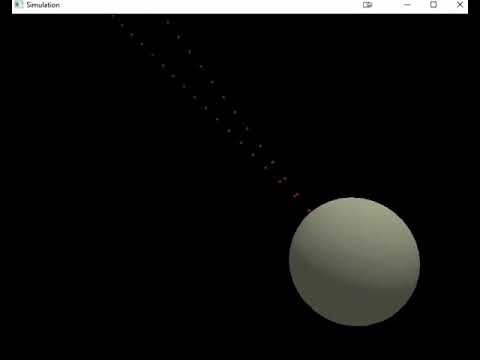

I am working on a 3D simulation of photons hitting a spherical object. Here is the video:

The problem is that the spacing between the flying photons is too large, and when I increase their number further, the animation starts to stutter. Everything runs on the CPU. Can I improve performance somehow, perhaps by using compute shaders or CUDA?

In my rendering loop I’m calling the “render” method:

    while (!glfwWindowShouldClose(window))
    {
        ...
        simulation.render();
        ...
    }

Simulation::render() looks like this:

    void Simulation::render()
    {
        for (size_t i = 0; i < number_of_particles; ++i)
            powder_particles[i].draw(*renderer_sphere);

        for (size_t i = 0; i < number_of_photons; ++i)
            photons[i].draw(*renderer_photon);
    }

The number of photons in the photons[] array is on the order of several thousand. Is it possible to use parallel computing on the graphics card instead of executing this loop sequentially on the processor?
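To make the question concrete: the loop above issues one draw call per photon, and the alternative I am asking about would presumably need all per-photon data in one contiguous array that can be uploaded once (for example with `glBufferData`) and drawn with a single instanced call such as `glDrawElementsInstanced`. Below is a minimal sketch of the packing step only. `PhotonData`, its 7-float layout, and `packInstances` are hypothetical names made up for illustration, not my actual classes, and no OpenGL calls are included.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical per-photon data (assumption: position, uniform size, color).
struct PhotonData {
    float position[3];
    float size;
    float color[3];
};

// Pack all photons into one flat float array, 7 floats per photon:
// [x, y, z, size, r, g, b]. An array like this could be uploaded in one
// call and read by the vertex shader as per-instance attributes.
std::vector<float> packInstances(const std::vector<PhotonData>& photons)
{
    constexpr std::size_t kFloatsPerInstance = 7;
    std::vector<float> buffer;
    buffer.reserve(photons.size() * kFloatsPerInstance);
    for (const PhotonData& p : photons) {
        buffer.insert(buffer.end(), p.position, p.position + 3);
        buffer.push_back(p.size);
        buffer.insert(buffer.end(), p.color, p.color + 3);
    }
    return buffer;
}
```

With this layout the model matrix would have to be built in the vertex shader from the position and size, instead of on the CPU once per photon.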

Here is the “draw” method:

    void Object_sphere::draw(SpriteRenderer_sphere& renderer)
    {
        renderer.drawSprite(position, size, rotation, color);
    }

And here is the “drawSprite” method:

    void SpriteRenderer_sphere::drawSprite(glm::vec3 position, glm::vec3 size, float rotate, glm::vec3 color)
    {
        shader.use();

        glm::mat4 projection = glm::perspective(glm::radians(camera.Zoom), static_cast<float>(SCR_WIDTH) / static_cast<float>(SCR_HEIGHT), 0.1f, 100.0f);
        glm::mat4 view = camera.GetViewMatrix();
        shader.setMat4("view", view);
        shader.setMat4("projection", projection);

        glm::mat4 model = glm::mat4(1.0f);
        model = glm::translate(model, position);
        model = glm::translate(model, glm::vec3(0.5f * size.x, 0.5f * size.y, 0.5f * size.z)); // move origin of rotation to center of quad
        model = glm::rotate(model, glm::radians(rotate), glm::vec3(0.0f, 0.0f, 1.0f)); // then rotate
        model = glm::translate(model, glm::vec3(-0.5f * size.x, -0.5f * size.y, -0.5f * size.z)); // move origin back
        model = glm::scale(model, glm::vec3(size));

        shader.setMat4("model", model);
        shader.setVec3f("spriteColor", color);

        glm::mat3 normalMatrix = transpose(inverse(view * model));
        shader.setMat3("normalMatrix", normalMatrix);

        glBindVertexArray(VAO);
        glDrawElements(GL_TRIANGLES, indices.size(), GL_UNSIGNED_INT, 0);
        glBindVertexArray(0);
    }

There is no interaction between photons.
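Because of that, each photon's update depends only on its own state. A simplified sketch of what I mean follows. `PhotonState`, its `vel` field, `stepPhoton`, and `stepAll` are hypothetical names for illustration only, and the collision with the sphere is left out.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical photon state; the velocity field is an assumption.
struct PhotonState {
    float pos[3];
    float vel[3];
};

// Advance one photon by dt. It reads and writes only its own state.
inline void stepPhoton(PhotonState& p, float dt)
{
    for (int k = 0; k < 3; ++k)
        p.pos[k] += p.vel[k] * dt;
}

// CPU reference loop: iteration i touches only photons[i], so the
// iterations could run in any order, or one per GPU thread.
void stepAll(std::vector<PhotonState>& photons, float dt)
{
    for (std::size_t i = 0; i < photons.size(); ++i)
        stepPhoton(photons[i], dt);
}
```

As far as I understand, the body of `stepPhoton` is the part that would become a compute shader invocation or a CUDA thread, indexed by `i`.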

Is it possible to run this code on the GPU?