Hello!

I started studying OpenGL a week ago, and I am using it through the OpenTK .NET framework.

I have 7 years of experience in programming, 3D data and computing.

My problem is a particular one. I found two somewhat similar topics in this forum, but either the accepted answer did not address my problem at all, or the problem was too different from mine.

For my own purposes, I am using a custom VertexBuffer that interprets its data from a byte array. Each vertex holds a position (three floats) followed by one integer attribute:

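```csharp
// Bind the VAO first so the attribute setup below is recorded in it.
GL.BindVertexArray(VertexArrayObject);

// Upload the raw interleaved vertex bytes.
GL.BindBuffer(BufferTarget.ArrayBuffer, VertexBufferObject);
GL.BufferData(BufferTarget.ArrayBuffer, this.Data.Length, this.Data, BufferUsageHint.StaticDraw);

// Attribute 0: position, 3 floats at byte offset 0.
GL.VertexAttribPointer(0, 3, VertexAttribPointerType.Float, false, this.VertexStride, 0);
GL.EnableVertexAttribArray(0);

// Attribute 1: one integer, read as an integer (the "I" variant), right after the position.
GL.VertexAttribIPointer(1, 1, VertexAttribIntegerType.Int, this.VertexStride, (IntPtr)(3 * sizeof(float)));
GL.EnableVertexAttribArray(1);
```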
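For reference, a minimal sketch of how a byte array could be packed so that it matches the two attribute pointers above: 12 bytes of position followed by a 4-byte int, i.e. a stride of 16 bytes. `VertexPacking` and `Pack` are hypothetical names used only for illustration; they are not part of OpenTK or of the actual VertexBuffer class.

```csharp
using System;

// Hypothetical helper: packs vertices into the interleaved layout expected by
// attribute 0 (vec3 float at byte offset 0) and attribute 1 (int at byte offset 12).
public static class VertexPacking
{
    // 3 floats (12 bytes) + 1 int (4 bytes) = 16 bytes per vertex.
    public const int VertexStride = 3 * sizeof(float) + sizeof(int);

    public static byte[] Pack(float[] positions, int[] colorIndices)
    {
        if (positions.Length != colorIndices.Length * 3)
            throw new ArgumentException("Expected three position floats per vertex.");

        var data = new byte[colorIndices.Length * VertexStride];
        for (int v = 0; v < colorIndices.Length; v++)
        {
            int baseOffset = v * VertexStride;
            // Buffer.BlockCopy offsets are in bytes; copies x, y, z of vertex v.
            Buffer.BlockCopy(positions, v * 3 * sizeof(float), data, baseOffset, 3 * sizeof(float));
            // Copies the integer attribute right after the position.
            Buffer.BlockCopy(colorIndices, v * sizeof(int), data, baseOffset + 3 * sizeof(float), sizeof(int));
        }
        return data;
    }
}
```

For a four-vertex strip, `VertexPacking.Pack(positions, new[] { 0, 1, 2, 3 })` returns a 64-byte array, and `VertexStride` (16) is the value the stride argument would need to have.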

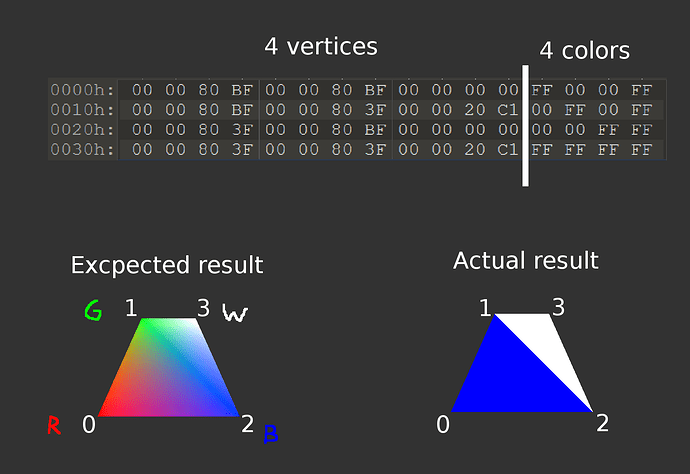

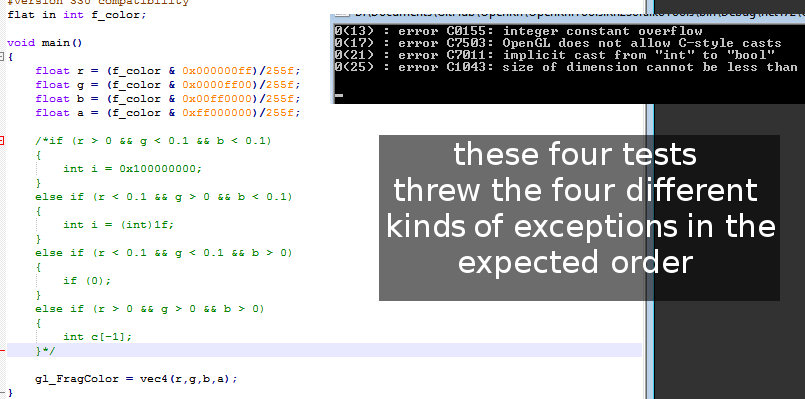

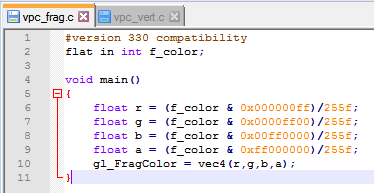

The images show the problem: the triangle is not displayed as expected, even though fragColor is set correctly four times, with the correct colors, in the expected order.

It looks like only the color that is set at the moment the triangle strip emits a triangle actually appears.
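That symptom is consistent with how OpenGL treats integer values passed between shader stages. The shaders are not shown here, so this is an assumption: if the integer attribute is forwarded to the fragment shader, GLSL requires an integer fragment input to be declared `flat`, which disables interpolation, so every fragment of a triangle receives the value written by that triangle's provoking vertex. For triangle strips this is, by default, the last vertex of each triangle. The sketch below is a CPU-side illustration only, not OpenGL code; `StripFlatShading` and `ProvokingVertices` are hypothetical names.

```csharp
using System;

// Hypothetical illustration: which vertex supplies the value of a `flat`
// varying for each triangle of a triangle strip.
public static class StripFlatShading
{
    // Triangle t of a strip uses vertices t, t+1 and t+2.
    // Last-vertex convention (OpenGL default): provoking vertex is t+2.
    // First-vertex convention: provoking vertex is t.
    public static int[] ProvokingVertices(int vertexCount, bool lastVertexConvention = true)
    {
        int triangleCount = Math.Max(0, vertexCount - 2);
        var result = new int[triangleCount];
        for (int t = 0; t < triangleCount; t++)
            result[t] = lastVertexConvention ? t + 2 : t;
        return result;
    }
}
```

For a four-vertex strip, `ProvokingVertices(4)` returns `{ 2, 3 }`, so under that assumption only the colors of the third and fourth vertices would ever be visible. If that is the cause, passing the color itself as a float attribute (which is interpolated) would blend all four values, while `glProvokingVertex` (OpenGL 3.2+) would only change which vertex wins.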

I've been looking for an answer for a while now, and I'd be really glad if a benevolent mind could help me figure out the issue.

Thank you!

http://www.soraiko.ovh/Links/1805202012311.png