Hi all!

I have a strange issue while migrating my code from OpenGL 3.3 to DSA buffers, which I think is related to data alignment in the shaders.

Let me summarize:

I have the following vertex shader (the fragment shader is not relevant for now). It's a simple shader that draws an animated 3D model with bone data.

    #version 330 core

    #define BONES_PER_VERTEX 4
    #define BONES_SUPPORT

    layout (location = 0) in vec3 Position;
    layout (location = 1) in vec3 Normal;
    layout (location = 2) in vec2 aTexCoords;
    layout (location = 3) in vec3 Tangent;
    layout (location = 4) in vec3 BiTangent;
    layout (location = 5) in uint BoneIDs[];
    layout (location = 6) in float Weights[];

    const int MAX_BONES = 100;

    uniform mat4 gBones[MAX_BONES];
    uniform mat4 model;
    uniform mat4 view;
    uniform mat4 projection;

    out vec2 TexCoords;

    void main()
    {
        TexCoords = aTexCoords;
        vec4 Pos = vec4(Position, 1.0);

    #ifdef BONES_SUPPORT
        mat4 BoneTransform = gBones[BoneIDs[0]] * Weights[0];
        BoneTransform += gBones[BoneIDs[1]] * Weights[1];
        BoneTransform += gBones[BoneIDs[2]] * Weights[2];
        BoneTransform += gBones[BoneIDs[3]] * Weights[3];
        Pos = BoneTransform * Pos;
    #endif

        gl_Position = projection * view * model * Pos;
    }

The vertex data comes from these data structures:

    #define NUM_BONES_PER_VERTEX 4 // Number of Bones per Vertex

    struct VertexBoneData
    {
        GLuint IDs[NUM_BONES_PER_VERTEX];
        GLfloat Weights[NUM_BONES_PER_VERTEX];

        VertexBoneData() { Reset(); }

        void Reset()
        {
            for (unsigned int i = 0; i < NUM_BONES_PER_VERTEX; ++i)
            {
                IDs[i] = 0;
                Weights[i] = 0;
            }
        }

        void AddBoneData(unsigned int BoneID, float Weight);
    };

    struct Vertex {
        glm::vec3 Position = { 0.0f, 0.0f, 0.0f };
        glm::vec3 Normal = { 0.0f, 0.0f, 0.0f };
        glm::vec2 TexCoords = { 0.0f, 0.0f };
        glm::vec3 Tangent = { 0.0f, 0.0f, 0.0f };
        glm::vec3 Bitangent = { 0.0f, 0.0f, 0.0f };
        VertexBoneData Bone;
    };

The “old” OpenGL 3.x code used to work fine with this buffer creation:

    // Create VAO
    glGenVertexArrays(1, &m_VAO);
    glBindVertexArray(m_VAO);

    // Load data into vertex buffers
    glGenBuffers(1, &m_vertexBuffer);
    glBindBuffer(GL_ARRAY_BUFFER, m_vertexBuffer);
    glBufferData(GL_ARRAY_BUFFER, m_vertices.size() * sizeof(Vertex), &m_vertices[0], GL_STATIC_DRAW);

    // Load data into index buffers (element buffer)
    glGenBuffers(1, &m_indicesBuffer);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, m_indicesBuffer);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, m_indices.size() * sizeof(unsigned int), &m_indices[0], GL_STATIC_DRAW);

    // Set the vertex attribute pointers
    // vertex Positions
    glEnableVertexAttribArray(POSITION_LOCATION);
    glVertexAttribPointer(POSITION_LOCATION, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)0);

    // vertex normals
    glEnableVertexAttribArray(NORMAL_LOCATION);
    glVertexAttribPointer(NORMAL_LOCATION, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, Normal));

    // vertex texture coords
    glEnableVertexAttribArray(TEX_COORD_LOCATION);
    glVertexAttribPointer(TEX_COORD_LOCATION, 2, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, TexCoords));

    // vertex tangent
    glEnableVertexAttribArray(TANGENT_LOCATION);
    glVertexAttribPointer(TANGENT_LOCATION, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, Tangent));

    // vertex bitangent
    glEnableVertexAttribArray(BITANGENT_LOCATION);
    glVertexAttribPointer(BITANGENT_LOCATION, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, Bitangent));

    // Bone Vertex ID's as Unsigned INT
    glEnableVertexAttribArray(BONE_ID_LOCATION);
    glVertexAttribIPointer(BONE_ID_LOCATION, 4, GL_UNSIGNED_INT, sizeof(Vertex), (void*)(offsetof(Vertex, Bone) + offsetof(VertexBoneData, IDs)));

    // Bone Vertex Weights
    glEnableVertexAttribArray(BONE_WEIGHT_LOCATION);
    glVertexAttribPointer(BONE_WEIGHT_LOCATION, 4, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)(offsetof(Vertex, Bone) + offsetof(VertexBoneData, Weights)));

    glBindVertexArray(0);

I have now migrated the code above to OpenGL 4.5 with DSA, and some of the data is not passed to the shader correctly. As far as I can tell, the “BoneIDs” and “Weights” attributes are not received properly in the shader, and I think it might be due to data packing.

The new buffer creation code is encapsulated in a class that looks like this:

    #include "main.h"
    #include "VertexArray.h"

    static GLenum ShaderDataTypeToOpenGLBaseType(ShaderDataType type)
    {
        switch (type)
        {
            case ShaderDataType::Float: return GL_FLOAT;
            case ShaderDataType::Float2: return GL_FLOAT;
            case ShaderDataType::Float3: return GL_FLOAT;
            case ShaderDataType::Float4: return GL_FLOAT;
            case ShaderDataType::Int: return GL_INT;
            case ShaderDataType::Int2: return GL_INT;
            case ShaderDataType::Int3: return GL_INT;
            case ShaderDataType::Int4: return GL_INT;
            case ShaderDataType::Bool: return GL_BOOL;
        }

        Logger::error("Unknown ShaderDataType");
        return 0;
    }

    VertexArray::VertexArray()
        :
        m_IndexBuffer(nullptr)
    {
        glCreateVertexArrays(1, &m_RendererID);
    }

    VertexArray::~VertexArray()
    {
        if (m_RendererID!=0)
            glDeleteVertexArrays(1, &m_RendererID);
    }

    void VertexArray::Bind() const
    {
        glBindVertexArray(m_RendererID);
    }

    void VertexArray::Unbind() const
    {
        glBindVertexArray(0);
    }

    void VertexArray::AddVertexBuffer(VertexBuffer* vertexBuffer)
    {
        const auto& layout = vertexBuffer->GetLayout();

        glVertexArrayVertexBuffer(m_RendererID, 0, vertexBuffer->GetBufferID(), 0, layout.GetStride());

        for (const auto& element : layout)
        {
            switch (element.Type)
            {
                case ShaderDataType::Float:
                case ShaderDataType::Float2:
                case ShaderDataType::Float3:
                case ShaderDataType::Float4:
                {
                    glEnableVertexArrayAttrib(m_RendererID, m_VertexBufferIndex);
                    glVertexArrayAttribFormat(m_RendererID, m_VertexBufferIndex,
                        element.GetComponentCount(),
                        ShaderDataTypeToOpenGLBaseType(element.Type),
                        element.Normalized ? GL_TRUE : GL_FALSE,
                        element.Offset);
                    glVertexArrayAttribBinding(m_RendererID, m_VertexBufferIndex, 0); // remove Binding hardcoded to 0
                    m_VertexBufferIndex++;
                    break;
                }
                case ShaderDataType::Int:
                case ShaderDataType::Int2:
                case ShaderDataType::Int3:
                case ShaderDataType::Int4:
                case ShaderDataType::Bool:
                {
                    glEnableVertexArrayAttrib(m_RendererID, m_VertexBufferIndex);
                    glVertexArrayAttribIFormat(m_RendererID, m_VertexBufferIndex,
                        element.GetComponentCount(),
                        ShaderDataTypeToOpenGLBaseType(element.Type),
                        element.Offset);
                    glVertexArrayAttribBinding(m_RendererID, m_VertexBufferIndex, 0); // remove Binding hardcoded to 0
                    m_VertexBufferIndex++;
                    break;
                }
                default:
                    Logger::error("Unknown ShaderDataType");
            }
        }

        m_VertexBuffers.push_back(vertexBuffer);

        glBindVertexArray(0);
    }

    void VertexArray::SetIndexBuffer(IndexBuffer* indexBuffer)
    {
        glVertexArrayElementBuffer(m_RendererID, indexBuffer->GetBufferID());
        m_IndexBuffer = indexBuffer;
    }

And the .h:

    #include <memory>
    #include "core/renderer/Buffer.h"

    class VertexArray
    {
    public:
        VertexArray();
        virtual ~VertexArray();

        virtual void Bind() const;
        virtual void Unbind() const;

        virtual void AddVertexBuffer(VertexBuffer* vertexBuffer);
        virtual void SetIndexBuffer(IndexBuffer* indexBuffer);

        virtual const std::vector<VertexBuffer*>& GetVertexBuffers() const { return m_VertexBuffers; }
        virtual const IndexBuffer* GetIndexBuffer() const { return m_IndexBuffer; }

    private:
        uint32_t m_RendererID; // Our "VAO"
        uint32_t m_VertexBufferIndex = 0;
        std::vector<VertexBuffer*> m_VertexBuffers;
        IndexBuffer* m_IndexBuffer;
    };

And the VAO setup is done as follows:

    // Allocate Vertex Array
    m_VertexArray = new VertexArray();

    // Create & Load the Vertex Buffer
    VertexBuffer *vertexBuffer = new VertexBuffer(&m_vertices[0], static_cast<uint32_t>(m_vertices.size() * sizeof(Vertex)));

    vertexBuffer->SetLayout({
        { ShaderDataType::Float3, "aPos"},
        { ShaderDataType::Float3, "aNormal"},
        { ShaderDataType::Float2, "aTexCoords"},
        { ShaderDataType::Float3, "aTangent"},
        { ShaderDataType::Float3, "aBiTangent"},
        { ShaderDataType::Int4, "aBoneID"},
        { ShaderDataType::Float4, "aBoneWeight"}
    });

    m_VertexArray->AddVertexBuffer(vertexBuffer);

    // Create & Load the Index Buffer
    IndexBuffer* indexBuffer = new IndexBuffer(&m_indices[0], static_cast<uint32_t>(m_indices.size()));

    m_VertexArray->SetIndexBuffer(indexBuffer);

    m_VertexArray->Unbind();

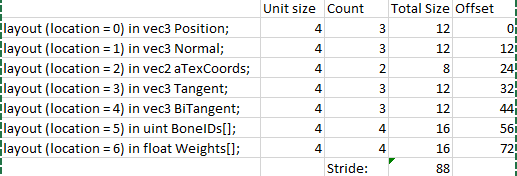

The Stride and offsets that I get are the following:

I think that maybe the Stride is wrong…, bit I don’t know how to calculate it properly… any clue?
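To make the question concrete, here is a minimal sketch of the check I have in mind: compare the offsets a layout class would derive by summing element sizes against what `offsetof` reports for the struct. `Vec3`, `Vec2`, `LayoutInfo`, `ComputeLayout`, `StructOffsets` and `AssertLayoutMatchesStruct` are hypothetical names for this example only; the stand-in types assume `glm::vec3`/`glm::vec2` are tightly packed floats and that `GLuint`/`GLfloat` are 4 bytes each.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-ins for the real types (assumption: glm vectors are tightly packed
// floats, GLuint and GLfloat are 4 bytes).
struct Vec3 { float x, y, z; };
struct Vec2 { float x, y; };

struct VertexBoneData {
    std::uint32_t IDs[4];
    float Weights[4];
};

struct Vertex {
    Vec3 Position;
    Vec3 Normal;
    Vec2 TexCoords;
    Vec3 Tangent;
    Vec3 Bitangent;
    VertexBoneData Bone;
};

// Hypothetical result of a layout computation: one offset per element,
// plus the total stride in bytes.
struct LayoutInfo {
    std::vector<std::size_t> offsets;
    std::size_t stride;
};

// Running-sum layout: each element starts where the previous one ended.
// This only matches the C++ struct if the compiler inserted no padding.
LayoutInfo ComputeLayout(const std::vector<std::size_t>& elementSizes) {
    LayoutInfo info{{}, 0};
    for (std::size_t size : elementSizes) {
        info.offsets.push_back(info.stride);
        info.stride += size;
    }
    return info;
}

// Offsets as the compiler actually laid out the struct.
std::vector<std::size_t> StructOffsets() {
    return {
        offsetof(Vertex, Position),
        offsetof(Vertex, Normal),
        offsetof(Vertex, TexCoords),
        offsetof(Vertex, Tangent),
        offsetof(Vertex, Bitangent),
        offsetof(Vertex, Bone) + offsetof(VertexBoneData, IDs),
        offsetof(Vertex, Bone) + offsetof(VertexBoneData, Weights),
    };
}

// Fails an assert (in debug builds) if the two views of the layout disagree.
void AssertLayoutMatchesStruct(const LayoutInfo& info) {
    assert(info.stride == sizeof(Vertex));
    assert(info.offsets == StructOffsets());
}
```

With element sizes `{12, 12, 8, 12, 12, 16, 16}` (Float3, Float3, Float2, Float3, Float3, Int4, Float4), the running sum gives a stride of 88 bytes, with the bone IDs at offset 56 and the weights at offset 72. If the numbers in the screenshot differ from these, the layout computation would be my first suspect; if they match, the stride is probably not the problem.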

Another important point is that in the “old” code I used to initialize the buffers as follows:

    glBindBuffer(GL_ARRAY_BUFFER, m_RendererID);
    glBufferData(GL_ARRAY_BUFFER, size, data, GL_STATIC_DRAW);

In the new code, I’m using the DSA functions instead:

    glNamedBufferStorage(m_RendererID, size, data, GL_MAP_READ_BIT);

Could this have any impact?

Thanks in advance!