This is my attempt at an example from a book:

void VolumetricFog::Load() {

pShaderProgram->CompileShader(VERTEX_SHADER, GLSLShader::VERTEX);

pShaderProgram->CompileShader(FRAGMENT_SHADER, GLSLShader::FRAGMENT);

pShaderProgram->Link();

// load noise 3D texture

int size = dim * dim * dim;

noise = new float[size];

std::random_device rd;

std::default_random_engine generator;

generator.seed(rd());

std::uniform_int_distribution<int> distribution(0, 255);

// Normalize

for (int i = 0; i < dim; i++)

for (int j = 0; j < dim; j++)

for (int k = 0; k < dim; k++)

noise[IX(i, j, k)] = distribution(generator) / 255.0f;

// Pass to OpenGL

glActiveTexture(GL_TEXTURE0);

glGenTextures(1, &textureID);

glBindTexture(GL_TEXTURE_3D, textureID);

// set the texture parameters

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

//set the mipmap levels (base and max)

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_BASE_LEVEL, 0);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MAX_LEVEL, 4);

glTexImage3D(GL_TEXTURE_3D, 0, GL_RED, dim, dim, dim, 0, GL_RED, GL_FLOAT, noise);

//generate mipmaps

glGenerateMipmap(GL_TEXTURE_3D);

//setup unit cube vertex array and vertex buffer objects

GLuint cubeVBOID, cubeIndicesID;

glGenVertexArrays(1, &cubeVAOID);

glGenBuffers(1, &cubeVBOID);

glGenBuffers(1, &cubeIndicesID);

//unit cube vertices

glm::vec3 vertices[8] = { glm::vec3(-0.5f,-0.5f,-0.5f),

glm::vec3(0.5f,-0.5f,-0.5f),

glm::vec3(0.5f, 0.5f,-0.5f),

glm::vec3(-0.5f, 0.5f,-0.5f),

glm::vec3(-0.5f,-0.5f, 0.5f),

glm::vec3(0.5f,-0.5f, 0.5f),

glm::vec3(0.5f, 0.5f, 0.5f),

glm::vec3(-0.5f, 0.5f, 0.5f) };

//unit cube indices

GLushort cubeIndices[36] = { 0,5,4,

5,0,1,

3,7,6,

3,6,2,

7,4,6,

6,4,5,

2,1,3,

3,1,0,

3,0,7,

7,0,4,

6,5,2,

2,5,1 };

glBindVertexArray(cubeVAOID);

glBindBuffer(GL_ARRAY_BUFFER, cubeVBOID);

//pass cube vertices to buffer object memory

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), &(vertices[0].x), GL_STATIC_DRAW);

//enable vertex attribute array for position

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, 0);

//pass indices to element array buffer

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, cubeIndicesID);

glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(cubeIndices), &cubeIndices[0], GL_STATIC_DRAW);

glBindVertexArray(0);

}

void VolumetricFog::Draw()

{

Camera* pCamera = Camera::GetInstance();

projection = pCamera->GetProjection();

view = pCamera->GetViewMatrix();

model = glm::mat4(1.f);

model = glm::translate(model, mPos);

glm::mat4 MVP = projection * view * model;

glEnable(GL_BLEND);

glBindVertexArray(cubeVAOID);

//bind the raycasting shader

pShaderProgram->Use();

//pass shader uniforms

pShaderProgram->SetUniform("volume", 0);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_3D, textureID);

pShaderProgram->SetUniform("MVP", MVP);

pShaderProgram->SetUniform("camPos", pCamera->Position);

pShaderProgram->SetUniform("step_size", 1.f / dim, 1.f / dim, 1.f / dim);

//render the cube

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_SHORT, 0);

//unbind the raycasting shader

//disable blending

glDisable(GL_BLEND);

}

int VolumetricFog::IX(int i, int j, int k)

{

return i + dim * j + dim * dim * k;

}

The code is pretty straightforward. First it generates 3D noise data, stores it in the noise array, and uploads that data to a 3D texture. Then it builds a VAO for a unit cube, and the Draw method renders this cube.
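To make the data layout concrete, here is a minimal standalone sketch of the noise generation step without any OpenGL calls. The names `flatIndex` and `makeNoiseVolume` are hypothetical helpers that are not part of the book's code; they only mirror what `IX` and the triple loop in `Load` do, and a `std::vector` is assumed in place of the raw `new float[size]` array.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper mirroring VolumetricFog::IX:
// i varies fastest, then j, then k.
inline std::size_t flatIndex(int i, int j, int k, int dim) {
    return static_cast<std::size_t>(i)
         + static_cast<std::size_t>(dim) * j
         + static_cast<std::size_t>(dim) * dim * k;
}

// Hypothetical helper: fills a dim^3 volume with uniform noise in [0, 1],
// the same way the loop in Load() does.
std::vector<float> makeNoiseVolume(int dim, unsigned seed) {
    assert(dim > 0);
    std::vector<float> noise(static_cast<std::size_t>(dim) * dim * dim);
    std::default_random_engine generator(seed);
    std::uniform_int_distribution<int> distribution(0, 255);
    for (int k = 0; k < dim; ++k)
        for (int j = 0; j < dim; ++j)
            for (int i = 0; i < dim; ++i)
                noise[flatIndex(i, j, k, dim)] = distribution(generator) / 255.0f;
    return noise;
}
```

With this layout, `noise.data()` could be passed to `glTexImage3D` in place of the raw pointer, since the first index is the fastest-varying one.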

Vertex shader:

#version 330 core

layout(location = 0) in vec3 vVertex; //object space vertex position

//uniform

uniform mat4 MVP; //combined modelview projection matrix

smooth out vec3 vUV; //3D texture coordinates for texture lookup in the fragment shader

void main()

{

//get the clipspace position

gl_Position = MVP*vec4(vVertex.xyz, 1.f);

//get the 3D texture coordinates by adding (0.5,0.5,0.5) to the object space

//vertex position. Since the unit cube is at origin (min: (-0.5,-0.5,-0.5) and max: (0.5,0.5,0.5))

//adding (0.5,0.5,0.5) to the unit cube object space position gives us values from (0,0,0) to

//(1,1,1)

vUV = vVertex + vec3(0.5);

}

Fragment shader:

#version 330 core

layout(location = 0) out vec4 vFragColor; //fragment shader output

smooth in vec3 vUV; //3D texture coordinates from vertex shader

//interpolated by rasterizer

//uniforms

uniform sampler3D volume; //volume dataset

uniform vec3 camPos; //camera position

uniform vec3 step_size; //ray step size

//constants

const int MAX_SAMPLES = 300; //total samples for each ray march step

const vec3 texMin = vec3(0); //minimum texture access coordinate

const vec3 texMax = vec3(1); //maximum texture access coordinate

void main()

{

//get the 3D texture coordinates for lookup into the volume dataset

vec3 dataPos = vUV;

//Getting the ray marching direction:

//get the object space position by subtracting 0.5 from the

//3D texture coordinates. Then subtract the camera position from it

//and normalize to get the ray marching direction

vec3 geomDir = normalize((vUV-vec3(0.5)) - camPos);

//multiply the raymarching direction with the step size to get the

//sub-step size we need to take at each raymarching step

vec3 dirStep = geomDir * step_size;

//flag to indicate if the raymarch loop should terminate

bool stop = false;

//for all samples along the ray

for (int i = 0; i < MAX_SAMPLES; i++) {

// advance ray by dirstep

dataPos = dataPos + dirStep;

//The two constants texMin and texMax have a value of vec3(0,0,0)

//and vec3(1,1,1) respectively. To determine if the sample position is

//outside the volume data, we use the sign function. The sign function

//returns -1 if the value is less than 0, 0 if the value is equal to 0

//and 1 if the value is greater than 0. Hence, for a position strictly

//inside the volume, both sign(dataPos-texMin) and sign(texMax-dataPos)

//give us vec3(1,1,1).

//When we do a dot product between two vec3(1,1,1) we get the answer 3.

//So while we are within the dataset limits, the dot product returns

//exactly 3. If it is less than 3, we are already out of

//the volume dataset

stop = dot(sign(dataPos-texMin),sign(texMax-dataPos)) < 3.0;

//if the stopping condition is true we break out of the ray marching loop

if (stop)

break;

// data fetching from the red channel of volume texture

float sample = texture(volume, dataPos).r;

//Opacity calculation using compositing:

//here we use front to back compositing scheme whereby the current sample

//value is multiplied to the currently accumulated alpha and then this product

//is subtracted from the sample value to get the alpha from the previous steps.

//Next, this alpha is multiplied with the current sample colour and accumulated

//to the composited colour. The alpha value from the previous steps is then

//accumulated to the composited colour alpha.

float prev_alpha = sample - (sample * vFragColor.a);

vFragColor.rgb = prev_alpha * vec3(sample) + vFragColor.rgb;

vFragColor.a += prev_alpha;

//early ray termination

//if the currently composited colour alpha is already fully saturated

//we terminate the loop

if( vFragColor.a>0.99)

break;

}

}
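To check my understanding of the loop, here is a rough CPU restatement of the fragment shader's ray march in plain C++. It is only a sketch: `Vec3`, `Rgba`, `signf`, `insideUnitCube`, and `marchRay` are hypothetical names, the volume lookup is replaced by an arbitrary density callback, and the accumulated colour is explicitly started at zero, which is an assumption since the shader above never writes an initial value to `vFragColor`.

```cpp
#include <array>
#include <cassert>
#include <functional>

using Vec3 = std::array<float, 3>;

struct Rgba { float r, g, b, a; };

// Same convention as GLSL sign(): -1, 0 or +1.
inline float signf(float v) {
    return static_cast<float>((v > 0.0f) - (v < 0.0f));
}

// Mirrors dot(sign(dataPos - texMin), sign(texMax - dataPos)) with
// texMin = 0 and texMax = 1: the dot product is 3 only strictly inside.
inline bool insideUnitCube(const Vec3& p) {
    float d = 0.0f;
    for (int c = 0; c < 3; ++c)
        d += signf(p[c] - 0.0f) * signf(1.0f - p[c]);
    return d >= 3.0f;
}

// Front-to-back compositing along one ray, following the shader's loop.
Rgba marchRay(Vec3 pos, const Vec3& dirStep, int maxSamples,
              const std::function<float(const Vec3&)>& density) {
    Rgba out{0.0f, 0.0f, 0.0f, 0.0f}; // assumed starting value
    for (int i = 0; i < maxSamples; ++i) {
        // advance the ray by one step
        for (int c = 0; c < 3; ++c) pos[c] += dirStep[c];
        // stop as soon as the sample position leaves the volume
        if (!insideUnitCube(pos)) break;
        const float s = density(pos);
        // weight of this sample: its value attenuated by the alpha so far
        const float prevAlpha = s - s * out.a;
        out.r += prevAlpha * s;
        out.g += prevAlpha * s;
        out.b += prevAlpha * s;
        out.a += prevAlpha;
        // early ray termination once nearly opaque
        if (out.a > 0.99f) break;
    }
    return out;
}
```

For a constant density of 0.5 and a step of 0.25 along z starting on the front face, three samples are taken and the alpha accumulates as 0.5, 0.75, 0.875, so the result only looks volumetric if the accumulated alpha is actually blended with what is behind the cube.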

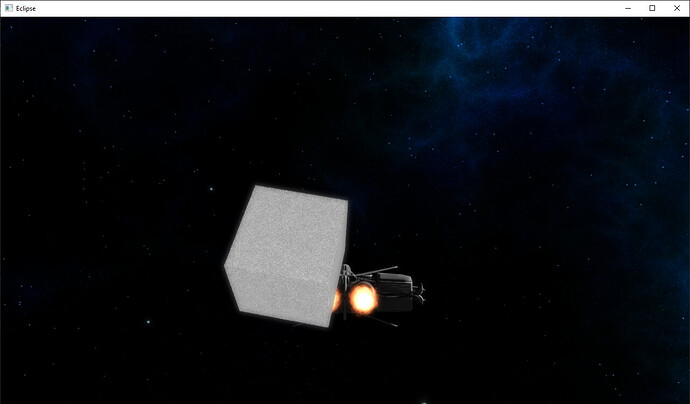

The cube in the picture above is the result.

As expected, there is a cube with noise as its texture. I cannot understand how the book (“OpenGL Development Cookbook”) expects this setup to draw a volume. Am I missing something?