Hi there!

(This question is about OpenGL ES, but that category seems to be for OpenGL ES-specific issues, while this one is for general GLSL questions.)

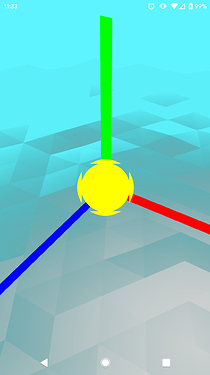

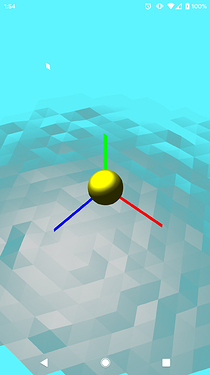

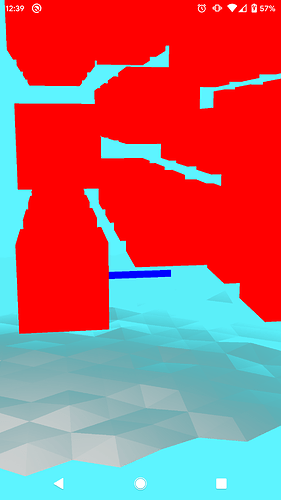

I want to draw non-polygonal objects, in this case simply spheres. Since there are no real polygons to draw for each individual object, my approach is to draw a box around the “camera” and, for each fragment, cast a ray, intersect it with the spheres, and set the fragment’s colour and depth from the hit. I currently find the intersection point with a method similar to the one in Wikipedia’s Line-sphere intersection article (I would provide a link, but I am unable to do so at the moment):

```glsl
#version 320 es
// Fragment shader.

precision mediump float;

// The dimensions of the sampler we use.
uniform uvec3 box_size;

// A sampler texture containing the sphere data.
// XYZ are the coordinates of each sphere and W is the radius.
uniform highp sampler3D points;

uniform mediump mat4x4 model;
uniform mediump mat4x4 view;
// This has to be highp since we use it in the vertex shader.
uniform highp mat4x4 projection;

// Scales the radius.
uniform float sphere_scale;

// The position of the box which we are processing (in view space,
// so the ray from the eye at the origin passes through it).
in vec3 f_Pos;

out vec4 outColour;

void main() {
    vec3 line = normalize(f_Pos);

    // Distance along the ray to the nearest hit so far; negative means no hit.
    float nearest = -1.0;

    // Loops over each of the spheres to try for an intersection.
    // I will most definitely improve this as loops of this kind are slow.
    for (uint i = 0u; i < box_size.x; i++) {
        for (uint j = 0u; j < box_size.y; j++) {
            for (uint k = 0u; k < box_size.z; k++) {
                // Acquire sphere data. texelFetch reads texel (i, j, k)
                // exactly; i / size would sample at texel edges, not centres.
                vec4 sphere = texelFetch(points, ivec3(i, j, k), 0);

                // Transform the sphere centre into view (eye) space.
                // The model matrix is applied first, so it goes on the right.
                vec3 center = (view * model * vec4(sphere.xyz, 1.0)).xyz;
                float radius = sphere.w * sphere_scale;

                // Line-sphere intersection. This assumes `o` from the wiki
                // article is the origin, since the eye remains at the origin
                // and we transform the rest of the world around it.
                // Note (u.c)^2 is the square of a dot product.
                float lc = dot(line, center);
                float ll = dot(line, line);
                float discriminant = lc * lc - ll * (dot(center, center) - radius * radius);

                // If we got a hit...
                if (discriminant >= 0.0) {
                    // The negative root is the closer intersection.
                    float dist_minus = (lc - sqrt(discriminant)) / ll;

                    // Keep the closest hit in front of the eye. (A plain
                    // `break` would only leave the innermost loop.)
                    if (dist_minus > 0.0 && (nearest < 0.0 || dist_minus < nearest)) {
                        nearest = dist_minus;
                    }
                }
            }
        }
    }

    // No intersection: discard. Leaving outColour and gl_FragDepth unassigned
    // on this path gives undefined values; it only appeared to do nothing.
    if (nearest < 0.0) {
        discard;
    }

    // We've found the distance, so we have a point now.
    vec4 point = vec4(line * nearest, 1.0);

    // This is the point of this thread.
    // How does one go about calculating this?
    gl_FragDepth = (projection * point).w;

    outColour = vec4(1.0, 1.0, 0.0, 1.0);
}
```

In essence, I calculate the point of intersection (if there is one) and try to assign a new value to gl_FragDepth, so that the fragment is depth-tested as if it were at the right distance instead of at the box’s position, which is right up against the projection’s near plane.

If this is an XY problem, please let me know; there may be a much easier way of drawing this.

I eventually want to do something like ray marching, hence my current attempt at a simple intersection.